James Duvall IV

AI in Computer Games

Senior Seminar

4/29/04

“Grenade! Move! Move! Move!” I was playing Half-Life when I had a mind-blowing experience. A non-player character (N.P.C.) recognized a grenade I had lobbed at a group of enemies, and one enemy shouted this warning to his compatriots. I sat at my computer amazed. Never before had I seen a character in a computer game make such a seemingly human response. By understanding how Artificial Intelligence (A.I.) is implemented in computer games, we can appreciate how difficult it is to simulate intelligence. The topics I will cover are the importance of A.I. in computer games, Rule-Based Systems, Scripting, Genetic Algorithms, and Neural Networks. Implementing A.I. in computer games can be costly in both development time and computing power, but the rewards are enormous.

A.I. is a very important part of the gaming experience, and it is essential on many levels. An agent (a computer-controlled character) relies on A.I. to move about its environment, to interact with the player, and to compete against the player. Imagine playing a game where your enemies ran into corners and kept running in place, or, worse, did not react to your presence at all. You only realize how important intelligent enemies are when you run into conditions like these. When people talk about A.I. and computers, most think of the famous contest of man vs. computer over a game of chess. The sole purpose of that computer, IBM's Deep Blue, was to beat its opponent at chess, and it succeeded. Of course, calling Deep Blue intelligent would be laughable: if a person fed it any information other than chess moves, it would have no response. This raises a question: what would determine whether a computer is intelligent? For this paper I define intelligence as the emulation of human behavior and decision making, and I apply this definition to computer games only. A.I. is an essential part of any computer game.

Probably the most widely used and best-known way to implement A.I. is through Rule-Based Systems (R.B.S.). “R.B.S. are a simple but successful AI technique. This technology originates in the early days of AI (mid-twentieth century), when the intention was to create intelligent systems by manipulating information,” (Pg 124, Champandard). An R.B.S. is usually a collection of IF THEN statements: IF an input meets the criteria in the IF clause, THEN the instructions following THEN are executed. After the system executes an action, it either loops back through the IF THEN statements or terminates. Examples of actions are moving an agent, changing the agent’s memory, or switching the agent to a different weapon. There are two methods of R.B.S., Forward-Chaining and Backward-Chaining. Forward-Chaining (Diagram 1) processes the known data through the rules until it reaches a goal. Backward-Chaining (Diagram 2) is the opposite: it starts with a goal and works backward to find the data that would support it. Author James Freeman-Hargis gives a good example of both:

Forward-Chaining:

Assertions (Working Memory):

A1: runny nose

A2: temperature=101.7

A3: headache

A4: cough

Rules (Rule-Base):

R1: if (nasal congestion)

(viremia)

then diagnose (influenza)

exit

R2: if (runny nose)

then assert (nasal congestion)

R3: if (body-aches)

then assert (achiness)

R4: if (temp > 100)

then assert (fever)

R5: if (headache)

then assert (achiness)

R6: if (fever)

(achiness)

(cough)

then assert (viremia)

Execution:

1. R2 fires, adding (nasal congestion) to working memory.

2. R4 fires, adding (fever) to working memory.

3. R5 fires, adding (achiness) to working memory.

4. R6 fires, adding (viremia) to working memory.

5. R1 fires, diagnosing the disease as (influenza), and exits, returning the diagnosis.
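The forward-chaining run above can be reproduced in a few lines of Python. This is a minimal sketch, not Freeman-Hargis's code. The `Rule` class and the `needs` helper are hypothetical names, and assertion A2 is stored as a number so that R4 can compare it with 100. After every firing, the loop rescans the rules from R1. It also skips any rule whose conclusion is already in working memory, so no rule fires twice.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Set, Tuple

# A condition looks at working memory: the facts plus the A2 temperature reading.
Condition = Callable[[Set[str], float], bool]


@dataclass
class Rule:
    name: str
    condition: Condition
    asserts: Optional[str] = None    # fact added to working memory when it fires
    diagnosis: Optional[str] = None  # conclusion that ends the run


def needs(*required: str) -> Condition:
    """Condition that holds when every required fact is in working memory."""
    return lambda facts, temperature: all(f in facts for f in required)


RULES = [
    Rule("R1", needs("nasal congestion", "viremia"), diagnosis="influenza"),
    Rule("R2", needs("runny nose"), asserts="nasal congestion"),
    Rule("R3", needs("body-aches"), asserts="achiness"),
    Rule("R4", lambda facts, temperature: temperature > 100, asserts="fever"),
    Rule("R5", needs("headache"), asserts="achiness"),
    Rule("R6", needs("fever", "achiness", "cough"), asserts="viremia"),
]


def forward_chain(facts: Set[str], temperature: float,
                  rules: List[Rule]) -> Tuple[Optional[str], List[str]]:
    facts = set(facts)          # copy so the caller's working memory is untouched
    fired: List[str] = []
    while True:
        for rule in rules:
            if rule.asserts is not None and rule.asserts in facts:
                continue        # its conclusion is already known; don't refire
            if rule.condition(facts, temperature):
                fired.append(rule.name)
                if rule.diagnosis is not None:
                    return rule.diagnosis, fired
                facts.add(rule.asserts)
                break           # restart the scan from the first rule
        else:
            return None, fired  # no rule can fire: nothing more to infer


diagnosis, fired = forward_chain({"runny nose", "headache", "cough"}, 101.7, RULES)
print(diagnosis, fired)  # influenza ['R2', 'R4', 'R5', 'R6', 'R1']
```

The rules fire in the same order as in the execution trace above.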

Backward-Chaining:

Using the same assertions and rule table the execution would be:

Execution:

1. R1 fires since the goal, diagnosis(influenza), matches the conclusion of that rule. New goals are created: (nasal congestion) and (viremia) and backchaining is recursively called with these new goals.

2. R2 fires, matching goal nasal congestion. New goal is created: (runny nose). Backchaining is recursively called. Since (runny nose) is in working memory, it returns true.

3. R6 fires, matching goal viremia. Back-chaining recursion with new goals: (fever), (achiness) and (cough).

4. R4 fires, adding goal (temperature > 100). Since (temperature = 101.7) is in working memory, it returns true.

5. R3 fires, adding goal (body-aches). On recursion, there is no information in working memory nor rules that match this goal. Therefore it returns false and the next matching rule is chosen. That rule is R5 which fires, adding goal (headache). Since (headache) is in working memory, it returns true.

6. Goal (cough) is in working memory, so that returns true.

7. Now, all recursive procedures have returned true, the system exits, returning true: this hypothesis was correct: subject has influenza.
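Backward chaining maps naturally onto recursion. In this hypothetical sketch (not taken from the source), each goal is looked up in a table of the rules that can conclude it. The function `prove` succeeds if the goal is already in working memory, or if every sub-goal of some matching rule can itself be proven. If a rule fails, it backtracks to the next one, which is what happens with R3 and R5. The goal "temperature > 100" is assumed to be checked numerically against assertion A2.

```python
from typing import Dict, List, Set, Tuple

# For each goal, the rules whose conclusion matches it, in rule-base order.
# Each rule is (name, sub-goals that must all be proven).
RULES: Dict[str, List[Tuple[str, List[str]]]] = {
    "influenza":        [("R1", ["nasal congestion", "viremia"])],
    "nasal congestion": [("R2", ["runny nose"])],
    "achiness":         [("R3", ["body-aches"]), ("R5", ["headache"])],
    "fever":            [("R4", ["temperature > 100"])],
    "viremia":          [("R6", ["fever", "achiness", "cough"])],
}


def prove(goal: str, facts: Set[str], temperature: float, fired: List[str]) -> bool:
    """Return True if the goal follows from working memory and the rules."""
    if goal == "temperature > 100":   # numeric test against assertion A2
        return temperature > 100
    if goal in facts:                 # the goal is already in working memory
        return True
    for name, subgoals in RULES.get(goal, []):
        fired.append(name)            # the rule matches the goal, so it "fires"
        if all(prove(g, facts, temperature, fired) for g in subgoals):
            return True               # every sub-goal was proven recursively
    return False                      # no fact or rule supports the goal; backtrack


fired: List[str] = []
result = prove("influenza", {"runny nose", "headache", "cough"}, 101.7, fired)
print(result, fired)  # True ['R1', 'R2', 'R6', 'R4', 'R3', 'R5']
```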

Another important point is that sometimes the data or assertions match more than one rule, and there are several ways to resolve such a conflict. The first is to proceed with the first matching rule found. The second is to select the best rule possible. The third is to select the most specific rule, meaning the rule with the most data inputs. The fourth remembers the previously used rule and prevents the same rule from firing twice in a row. The fifth is to select one of the matching rules at random (Champandard). Creating the rules begins with an understanding of the problem. From there, the designer enlists an expert in the problem's field to write rules that achieve certain goals. It is that simple. R.B.S. have many advantages:

§ Simplicity – Individual rules have simple syntax and express knowledge in a natural fashion. Rule-based systems are based on human reasoning, which implies it is straightforward for experts to understand their functioning. The knowledge is also defined in an implicit fashion, saving much time and effort.

§ Modularity – Production rules capture knowledge in a relatively atomic fashion. Statements can be combined and edited independent of each other. This makes R.B.S. simple to extend.

§ Flexibility – By specifying knowledge in a relatively informal fashion (not as logic), the data can be manipulated to provide the desired results. It is also easy to add more symbols in the working memory, or even improve the knowledge representation used.

§ Applicability – Because most problems can be formalized in a compatible way (for instance, as symbols), R.B.S. will often be a possible solution, and one that works! The scope of applicable domains ranges far, from simulation to problem solving.

(Pg 136, Champandard)

Of course, there are also disadvantages. An R.B.S. can become very inefficient as the number of rules grows. When the designer needs an expert to define the rules, one is sometimes not available. It is also difficult to understand the system's behavior just by looking at its rules. An example of an R.B.S. in a game can be seen in Pac-Man, where the ghosts are controlled by rules of this kind. Their assertions could include the current direction, the walls around the ghost, and whether a power pellet has been eaten. Many more rules and assertions control the game itself. For the most part, R.B.S. appear to be on their way out of computer games as new technologies emerge, but they remain an important staple of computer game A.I.
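The conflict-resolution strategies described earlier can be illustrated with a rule-based ghost. Everything here is hypothetical, including the facts ("sees player", "frightened", "wall ahead") and the actions. It is not how Pac-Man was actually programmed. Only the first-match, most-specific, no-repeat, and random strategies are shown, because choosing the "best" rule requires some extra measure of quality.

```python
import random
from typing import Callable, List, NamedTuple, Optional, Set


class GhostRule(NamedTuple):
    name: str
    conditions: List[str]  # facts that must all be true for the rule to match
    action: str            # what the ghost does when the rule is chosen


# Hypothetical rule base for one ghost; the facts are invented for illustration.
RULES = [
    GhostRule("wander", [], "keep going"),
    GhostRule("chase", ["sees player"], "move toward player"),
    GhostRule("flee", ["sees player", "frightened"], "move away from player"),
    GhostRule("turn", ["wall ahead"], "turn at wall"),
]

Strategy = Callable[[List[GhostRule], random.Random], GhostRule]


def first_match(candidates, rng):
    return candidates[0]  # rule order decides


def most_specific(candidates, rng):
    # The rule that tests the most facts; max() keeps the earlier rule on a tie.
    return max(candidates, key=lambda rule: len(rule.conditions))


def at_random(candidates, rng):
    return rng.choice(candidates)


def no_repeat(last_rule: str) -> Strategy:
    """Skip the rule that fired last time, unless it is the only match."""
    def pick(candidates, rng):
        fresh = [rule for rule in candidates if rule.name != last_rule]
        return (fresh or candidates)[0]
    return pick


def choose_action(facts: Set[str], strategy: Strategy,
                  rng: Optional[random.Random] = None) -> str:
    rng = rng or random.Random()
    candidates = [r for r in RULES if all(c in facts for c in r.conditions)]
    return strategy(candidates, rng).action


seen = {"sees player", "frightened"}
print(choose_action(seen, first_match))    # keep going
print(choose_action(seen, most_specific))  # move away from player
```

The catch-all "wander" rule matches every situation, so under first-match it always wins. This is why rule order, or a specificity-based strategy, has to be chosen with care.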

Scripting is an extension of R.B.S., as it allows a person to define the actions an agent must take when presented with certain conditions. Scripting languages such as Python, LISP, and Lua are used for this process. Scripts are typically interpreted at runtime rather than compiled ahead of time. They are usually less complicated to write than code in a standard programming language, and they are easy to modify. Runtime interpretation saves a lot of time in the development phase, because compiling a major project takes a long time. When debugging an A.I. problem, the developer does not need to recompile the project after changing a script. Scripting is used all the time. In the game Quake II, a voting system determines which weapon a bot (an agent) should use in a given situation (fleeing, engaging, acquiring health, etc.). The votes depend on the bot's surroundings, enemy distance, movement, number of enemies, and so on. Each weapon's votes are then multiplied by that weapon's value to produce a final fitness, and the fittest weapon is chosen. Scripting involves a lot of experimentation: changing values just a little could turn your bot into Superman or a slug. Scripting has also been used in Real Time Strategy games like Age of Empires (AOE). In AOE, the player could change the way the computer played simply by changing values in a text file, which made the A.I. highly customizable. Scripting is a great tool that will be around for many years to come.
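The weapon vote can be sketched as follows. This is not Quake II's actual code: the weapons, their values, the `situation_votes` heuristics, and the text format are all assumptions. The point is that the weapon values live in a plain text "script" that is parsed at runtime. A designer can therefore retune the bot by editing numbers, much like the AOE text files, without recompiling.

```python
from typing import Dict

# Hypothetical tuning "script": editing these numbers changes the bot's
# choices without recompiling. Weapons and values are invented for illustration.
WEAPON_SCRIPT = """
# weapon   value
blaster    1.0
shotgun    2.5
railgun    4.0
"""


def load_values(text: str) -> Dict[str, float]:
    """Parse 'name value' lines, ignoring blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if line:
            name, value = line.split()
            values[name] = float(value)
    return values


def situation_votes(enemy_distance: float, enemy_count: int) -> Dict[str, float]:
    """Hypothetical votes: how well each weapon suits the current situation."""
    votes = {"blaster": 1.0, "shotgun": 0.0, "railgun": 0.0}
    if enemy_distance < 200:
        votes["shotgun"] += 2.0   # close range favors the shotgun
    else:
        votes["railgun"] += 2.0   # long range favors the railgun
    if enemy_count > 2:
        votes["shotgun"] += 1.0   # crowds favor spread damage
    return votes


def choose_weapon(enemy_distance: float, enemy_count: int,
                  values: Dict[str, float]) -> str:
    votes = situation_votes(enemy_distance, enemy_count)
    # Final fitness = situational vote multiplied by the weapon's scripted value.
    fitness = {w: votes.get(w, 0.0) * v for w, v in values.items()}
    return max(fitness, key=fitness.get)


values = load_values(WEAPON_SCRIPT)
print(choose_weapon(500, 1, values))  # railgun
print(choose_weapon(100, 3, values))  # shotgun
```

Changing the railgun's value from 4.0 to 0.1 in the text is enough to make the bot prefer the blaster at long range. This is the kind of Superman-or-slug swing that small value changes can cause.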

Genetic Algorithms (G.A.s) are modeled on the process by which nature operates: evolution. They are commonly used on problems that have no definitive or directly computable answer. Path finding is a good example of such a problem in computer games. Author Mat Buckland (1) gives a good example of a loop of a G.A.:

Loop until a solution is found:

1. Test each chromosome to see how good it is at solving the problem and assign a fitness score accordingly.

2. Select two members from the current population. The probability of being selected is proportional to the chromosome’s fitness – the higher the fitness, the better the probability of being selected. A common method for this is called Roulette wheel selection.

3. Dependent on the Crossover Rate, crossover the bits from each chosen chromosome at a randomly chosen point.

4. Step through the chosen chromosome’s bits and flip dependent on the Mutation Rate.

5. Repeat steps 2, 3, and 4 until a new population of one hundred members has been created.

End Loop

Each loop through the algorithm is called a generation (steps 1 through 5).

(Pg 99)
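Buckland's loop translates almost line for line into Python. This sketch assumes a toy problem in which a chromosome's fitness is simply its number of 1 bits, so a perfect chromosome is all 1s. The crossover rate of 0.7 and mutation rate of 0.001 are assumed starting values, not tuned ones. The population of one hundred follows step 5.

```python
import random
from typing import List, Optional, Tuple

Chromosome = List[int]


def fitness(chromosome: Chromosome) -> int:
    """Hypothetical toy problem: the more 1 bits, the fitter."""
    return sum(chromosome)


def roulette_select(population: List[Chromosome], scores: List[int],
                    rng: random.Random) -> Chromosome:
    """Step 2: pick a chromosome with probability proportional to its fitness."""
    total = sum(scores)
    if total == 0:
        return rng.choice(population)  # every slice is empty; pick uniformly
    pick = rng.uniform(0, total)
    running = 0.0
    for chromosome, score in zip(population, scores):
        running += score
        if running >= pick:
            return chromosome
    return population[-1]  # guards against floating-point round-off


def crossover(mum: Chromosome, dad: Chromosome, rate: float,
              rng: random.Random) -> Tuple[Chromosome, Chromosome]:
    """Step 3: single-point crossover, applied with probability `rate`."""
    if rng.random() < rate:
        point = rng.randint(1, len(mum) - 1)
        return mum[:point] + dad[point:], dad[:point] + mum[point:]
    return mum[:], dad[:]  # no crossover: the children are copies of the parents


def mutate(chromosome: Chromosome, rate: float, rng: random.Random) -> Chromosome:
    """Step 4: flip each bit with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in chromosome]


def run_ga(length: int = 20, pop_size: int = 100, crossover_rate: float = 0.7,
           mutation_rate: float = 0.001, max_generations: int = 200,
           seed: Optional[int] = None) -> Tuple[int, Chromosome]:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for generation in range(max_generations):
        scores = [fitness(c) for c in population]           # step 1
        best = max(range(pop_size), key=scores.__getitem__)
        if scores[best] == length:                           # a solution was found
            return generation, population[best]
        children: List[Chromosome] = []
        while len(children) < pop_size:                      # step 5
            mum = roulette_select(population, scores, rng)
            dad = roulette_select(population, scores, rng)
            for child in crossover(mum, dad, crossover_rate, rng):
                children.append(mutate(child, mutation_rate, rng))
        population = children[:pop_size]
    return max_generations, max(population, key=fitness)


generation, best = run_ga(seed=1)
print(generation, best)
```

Each pass through the `for generation` loop is one generation. The function returns early if a perfect chromosome appears, and otherwise returns the best one found.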

Two main problems arise in a G.A. The first is how to select the parent chromosomes. There are many methods for solving this problem; here are some:

- Roulette Wheel – Think of this as a pie in which a chromosome with a higher fitness score gets a larger slice. On average, then, a chromosome with higher fitness is picked more often than one with lower fitness.

- Elitism – This ensures that a given percentage of the fittest chromosomes are copied unchanged into the new population.

- Tournament – A number n of chromosomes are chosen at random from the population, and the fittest of them is selected to join the new population. Note that even though it joins the new population, it remains in the old one and can be selected again. This process continues until the new population has reached its maximum size.
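Elitism and tournament selection are both short functions. In this hypothetical sketch, fitness is again just the number of 1 bits (Python's built-in `sum`), the elite fraction is 10%, and tournaments use n = 3. Crossover and mutation of the winners are omitted to keep the focus on selection.

```python
import random
from typing import Callable, List

Chromosome = List[int]
Fitness = Callable[[Chromosome], float]


def elite(population: List[Chromosome], fitness: Fitness,
          fraction: float) -> List[Chromosome]:
    """Elitism: copy the fittest `fraction` of the population unchanged."""
    ranked = sorted(population, key=fitness, reverse=True)
    return [c[:] for c in ranked[:int(len(population) * fraction)]]


def tournament_select(population: List[Chromosome], fitness: Fitness,
                      n: int, rng: random.Random) -> Chromosome:
    """Tournament: draw n distinct chromosomes at random and keep the fittest.
    The winner is not removed, so it can win again in a later tournament."""
    contenders = rng.sample(population, n)
    return max(contenders, key=fitness)


rng = random.Random(0)
population = [[rng.randint(0, 1) for _ in range(10)] for _ in range(20)]

# Next generation: 10% elites, the rest filled by tournaments of size 3.
new_population = elite(population, sum, 0.1)
while len(new_population) < len(population):
    new_population.append(tournament_select(population, sum, 3, rng)[:])
```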

The second problem is choosing the type of mutation (examples are shown in Diagram 3). These operators rearrange the genes of a chromosome that encodes an ordering, rather than flipping bits:

- Scramble Mutation – Choose a range of genes and shuffle them into random positions within that range.

- Displacement Mutation – Choose a range of genes, extract it, and reinsert it somewhere else along the chromosome.

- Insertion Mutation – Closely resembles displacement mutation, but moves a single gene instead of a range.

- Inversion Mutation – Choose a range of genes and reverse their order within that range.
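The four mutation operators can be sketched on a chromosome stored as a list of genes, matching the 1 to 8 strings in Diagram 3. Ranges and insertion positions are chosen at random, so a displaced gene may occasionally land back where it started. `random_range` is a hypothetical helper. Calling `invert_range` with start 3 and end 7 reproduces the inversion example in Diagram 3.

```python
import random
from typing import List, Tuple

Genes = List[int]


def random_range(n: int, rng: random.Random) -> Tuple[int, int]:
    """Pick start < end so that genes[start:end] holds at least two genes."""
    start = rng.randrange(0, n - 1)
    end = rng.randrange(start + 2, n + 1)
    return start, end


def scramble_mutation(genes: Genes, rng: random.Random) -> Genes:
    start, end = random_range(len(genes), rng)
    segment = genes[start:end]
    rng.shuffle(segment)                   # shuffle only inside the range
    return genes[:start] + segment + genes[end:]


def displacement_mutation(genes: Genes, rng: random.Random) -> Genes:
    start, end = random_range(len(genes), rng)
    segment, rest = genes[start:end], genes[:start] + genes[end:]
    pos = rng.randint(0, len(rest))        # reinsert the range at a random spot
    return rest[:pos] + segment + rest[pos:]


def insertion_mutation(genes: Genes, rng: random.Random) -> Genes:
    rest = genes[:]
    gene = rest.pop(rng.randrange(len(rest)))     # remove a single gene...
    rest.insert(rng.randint(0, len(rest)), gene)  # ...and reinsert it at random
    return rest


def invert_range(genes: Genes, start: int, end: int) -> Genes:
    return genes[:start] + genes[start:end][::-1] + genes[end:]


def inversion_mutation(genes: Genes, rng: random.Random) -> Genes:
    start, end = random_range(len(genes), rng)
    return invert_range(genes, start, end)


parent = [1, 2, 3, 4, 5, 6, 7, 8]
print(invert_range(parent, 3, 7))  # [1, 2, 3, 7, 6, 5, 4, 8], as in Diagram 3
```

Every operator returns a new list containing the same genes, so the chromosome always remains a valid ordering.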

For both of these problems, each solution has its strengths and weaknesses. Unfortunately, the only way to truly find which works best for a given problem is trial and error. There are other important values to tinker with as well:

- the crossover rate, which is the probability that two parents exchange bits; otherwise the parents are copied unchanged as the children;
- the mutation rate, which is the probability of a mutation; in the loop above, it is the chance that each bit is flipped;
- the population size;
- the chromosome length, which is the length of the string of bits.

As Mat Buckland (1) states, “In the end, choosing these values comes down to obtaining a ‘feel’ for genetic algorithms, and you’ll only get that by coding your own and playing around with the parameters to see what happens,” (Pg 112). G.A.s can be very successful and are applicable to many problems. WarCraft, for example, is a Real Time Strategy game in which the player creates armies and moves them to defeat an opponent. To send troops anywhere, each character has to be able to navigate the terrain, and this is where path finding becomes important. Losing troops because they cannot get around a building or a tree is maddening. Even well-renowned games like WarCraft still have difficulty with path finding, and hopefully G.A.s will help solve that problem. G.A.s seem to be the wave of the future, especially when they are coupled with the next topic, Neural Networks.
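To apply a G.A. to path finding, a chromosome has to be decoded into a route and scored. The sketch below uses one common style of encoding, in which every two bits stand for one move. The maze, the bit-to-direction mapping, and the fitness formula are assumptions for illustration. Fitness is 1.0 when the walk ends at the exit and shrinks with the Manhattan distance from it, so it can serve as the score in step 1 of a G.A. loop.

```python
from typing import Dict, List, Tuple

# Hypothetical maze: S = start, E = exit, # = wall, . = open floor.
MAZE = ["S..#",
        "##.#",
        "...E"]

# Assumed encoding: every two bits are one move (row change, column change).
MOVES: Dict[Tuple[int, int], Tuple[int, int]] = {
    (0, 0): (-1, 0),  # up
    (0, 1): (1, 0),   # down
    (1, 0): (0, 1),   # right
    (1, 1): (0, -1),  # left
}


def find(symbol: str) -> Tuple[int, int]:
    for row, line in enumerate(MAZE):
        col = line.find(symbol)
        if col != -1:
            return row, col
    raise ValueError(f"{symbol!r} not in maze")


def walk(bits: List[int]) -> Tuple[int, int]:
    """Follow the moves encoded in a chromosome; moves into walls are ignored."""
    row, col = find("S")
    for i in range(0, len(bits) - 1, 2):
        d_row, d_col = MOVES[(bits[i], bits[i + 1])]
        r, c = row + d_row, col + d_col
        if 0 <= r < len(MAZE) and 0 <= c < len(MAZE[0]) and MAZE[r][c] != "#":
            row, col = r, c
        if MAZE[row][col] == "E":
            break  # reached the exit; the remaining genes are ignored
    return row, col


def path_fitness(bits: List[int]) -> float:
    """1.0 at the exit, smaller the farther (Manhattan distance) the walk ends."""
    row, col = walk(bits)
    exit_row, exit_col = find("E")
    return 1.0 / (abs(exit_row - row) + abs(exit_col - col) + 1)


# right, right, down, down, right
print(path_fitness([1, 0, 1, 0, 0, 1, 0, 1, 1, 0]))  # 1.0
```

Because this fitness ignores walls, a route that stops just behind a wall next to the exit still scores well. Real path-finding fitness functions usually need more care than this.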

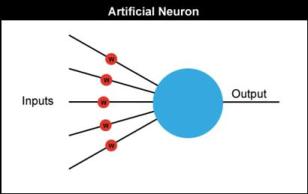

Neural Networks (N.N.s) are our attempt to recreate the workings of the human brain. The brain contains tens of billions of neurons, which are interconnected and communicate with each other over their synapses. Neurons resemble computers in that a neuron either fires or does not fire (1 or 0). The goal of N.N.s is to duplicate some characteristics of the brain, such as learning under supervision, processing information efficiently, and generalizing.

We begin with the digital neuron. This neuron (Diagram 4) takes multiple inputs and produces one output. In the human brain, neurons are connected to each other in no particular order. With digital neurons, we are dealing with a feed-forward system: the neurons are arranged in layers, and the outputs of one layer are the inputs to the next. For simplicity, no neuron connects back to a previous layer. Every input has a weight. Each weight is multiplied by its input's value, which is 0 or 1 depending on whether that input is firing. The products are then added together to get the total activation. If this total is higher than the threshold (usually 1), the neuron fires (outputs a 1). The number of neurons and the number of layers depend on each problem, and the more neurons are added, the more costly the N.N. becomes.

Once the N.N. is built, the developer conducts supervised learning: feeding it certain inputs and adjusting the weights until it produces the desired outputs. Interestingly, once it can recognize a certain object, it is able to generalize. If it had learned using a tennis ball and then saw a beach ball, it would be able to recognize that both were balls. In games, N.N.s can be applied to creating smarter, better opponents. By integrating the G.A.s from the previous section, we can weed out the least effective N.N.s and, hopefully, produce an N.N. that creates an intelligent agent.

N.N.s have not been implemented in many games, as they are an emerging technology. They are covered in this paper because I believe they will be used more in the future.
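The digital neuron and the feed-forward system described above can be sketched as follows. The threshold of 1 and the 0/1 inputs follow the text. The hand-set XOR weights are hypothetical. The perceptron learning rule in `train_neuron` is one standard way of "adjusting the weights", swapped in here for illustration. XOR is used because a single threshold neuron cannot compute it, which shows why layers help.

```python
from typing import List, Sequence, Tuple

THRESHOLD = 1.0  # the neuron fires when its activation is higher than this


def neuron_output(weights: Sequence[float], inputs: Sequence[int]) -> int:
    """Weighted sum of 0/1 inputs compared with the threshold."""
    activation = sum(w * x for w, x in zip(weights, inputs))
    return 1 if activation > THRESHOLD else 0


def feed_forward(layers: List[List[List[float]]], inputs: List[int]) -> List[int]:
    """Each layer is a list of neurons (weight lists); outputs feed the next layer."""
    signals = inputs
    for layer in layers:
        signals = [neuron_output(weights, signals) for weights in layer]
    return signals


# Hand-set, hypothetical weights for a two-layer network computing XOR.
XOR_NET = [
    [[1.5, 1.5],    # hidden neuron: fires if either input fires (OR)
     [0.6, 0.6]],   # hidden neuron: fires only if both inputs fire (AND)
    [[1.5, -1.0]],  # output neuron: OR but not AND
]


def train_neuron(samples: List[Tuple[List[int], int]], n_inputs: int,
                 rate: float = 0.1, max_epochs: int = 100) -> List[float]:
    """Supervised learning with the perceptron rule: nudge each weight by
    rate * (desired output - actual output) * input until no errors remain."""
    weights = [0.0] * n_inputs
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in samples:
            error = target - neuron_output(weights, inputs)
            if error:
                errors += 1
                weights = [w + rate * error * x for w, x in zip(weights, inputs)]
        if errors == 0:
            break
    return weights


AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
and_weights = train_neuron(AND_SAMPLES, 2)
print([neuron_output(and_weights, x) for x, _ in AND_SAMPLES])             # [0, 0, 0, 1]
print([feed_forward(XOR_NET, [a, b])[0] for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```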

A.I. in computer games can be implemented in many different ways. This paper has covered several possible implementations: Rule-Based Systems, Scripting, Genetic Algorithms, and Neural Networks. Hopefully, in the near future, gamers will be unable to distinguish between playing against a human and playing against the computer.

Diagrams

Diagram 1

(Freeman-Hargis)

Diagram 2

(Freeman-Hargis)

Diagram 3

Scramble Mutation:

12345678

12365748

Displacement Mutation:

12345678

13452678

Insertion Mutation:

12345678

12356478

Inversion Mutation:

12345678

12376548

Diagram 4

(Buckland (2))

Works Cited

(1) Buckland, Mat, “AI Techniques for Game Programming,” Premier Press, 2002, ISBN 1-931841-08-X

(2) Buckland, Mat, “Neural Network Tutorial,” Unknown, http://www.ai-junkie.com/nnt1.html

Champandard, Alex, “AI Game Development,” New Riders, 2004, ISBN 1-5927-3004-3

Freeman-Hargis, James, “Rule-Based Systems and Identification Trees,” 11/23/03, http://ai-depot.com/Tutorial/RuleBased.html

Woodcock, Steven, “Game AI Page,” 4/14/04, http://www.gameai.com/