(rev. May 02, 2015)

Notes On Chapter Twenty-Five

-- TCP: Reliable Transport Service

- 25.0 Study Guide

- Understand that TCP is a connection-oriented, Point-to-Point, Reliable,

Full Duplex, Stream Interface protocol with reliable connection startup

and graceful shutdown. Understand some of the details of each item in

the foregoing list of characteristics.

- Understand what problems have to be solved to make TCP reliable:

unreliable communication, end system reboot, heterogeneous end systems,

and congestion.

- Understand how sequencing handles duplication and out-of-order delivery.

- Understand how positive acknowledgement with retransmission handles the

problem of lost packets.

- Understand how unique connection identifiers are used to avert replay

errors.

- Understand how sliding window flow control allows data to be sent

at a relatively high rate, but not so fast that receivers are

overwhelmed.

- Remember that TCP monitors round-trip delay time and adapts its

retransmission timeout values accordingly.

- Remember details of how TCP's back off and slow start methodology

handles congestion (see notes on section 25.13).

- 25.1 Introduction

- Transport protocols in general

- TCP in particular

- Services provided by TCP

- How TCP provides reliable delivery

- 25.2 The Transmission Control Protocol

- The Transmission Control Protocol (TCP) provides reliable

transport service on the Internet.

- An application using TCP can treat the Internet pretty much like an

abstract I/O system - for example, like a file system. That is not

the case for applications that use a best-effort connectionless

service such as UDP. Therefore TCP makes things easier for network

application programmers.

- 25.3 The Service TCP Provides to Applications

- Seven major features of TCP:

- Connection Oriented: requires connection creation, use,

and tear-down.

- Point-to-Point Communication: a connection has exactly

two endpoints.

- Complete Reliability: ensures complete, in-order

delivery

- Full Duplex Communication: either party can send data any

time.

- Stream Interface: data sent and received as a continuous

stream.

- Reliable Connection Startup

- Graceful Connection Shutdown: TCP ensures that all data

is delivered and both sides are in agreement before their

connection is shut down.

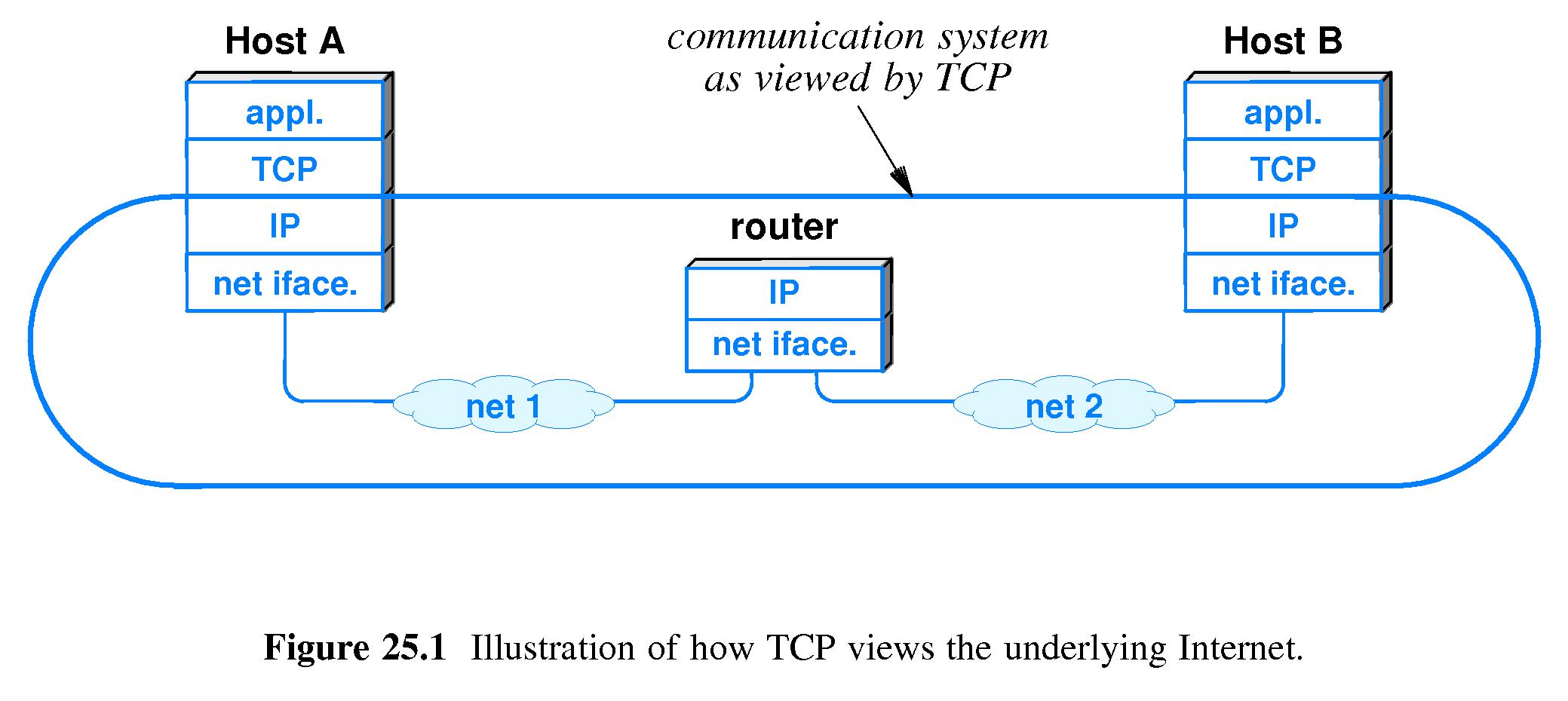

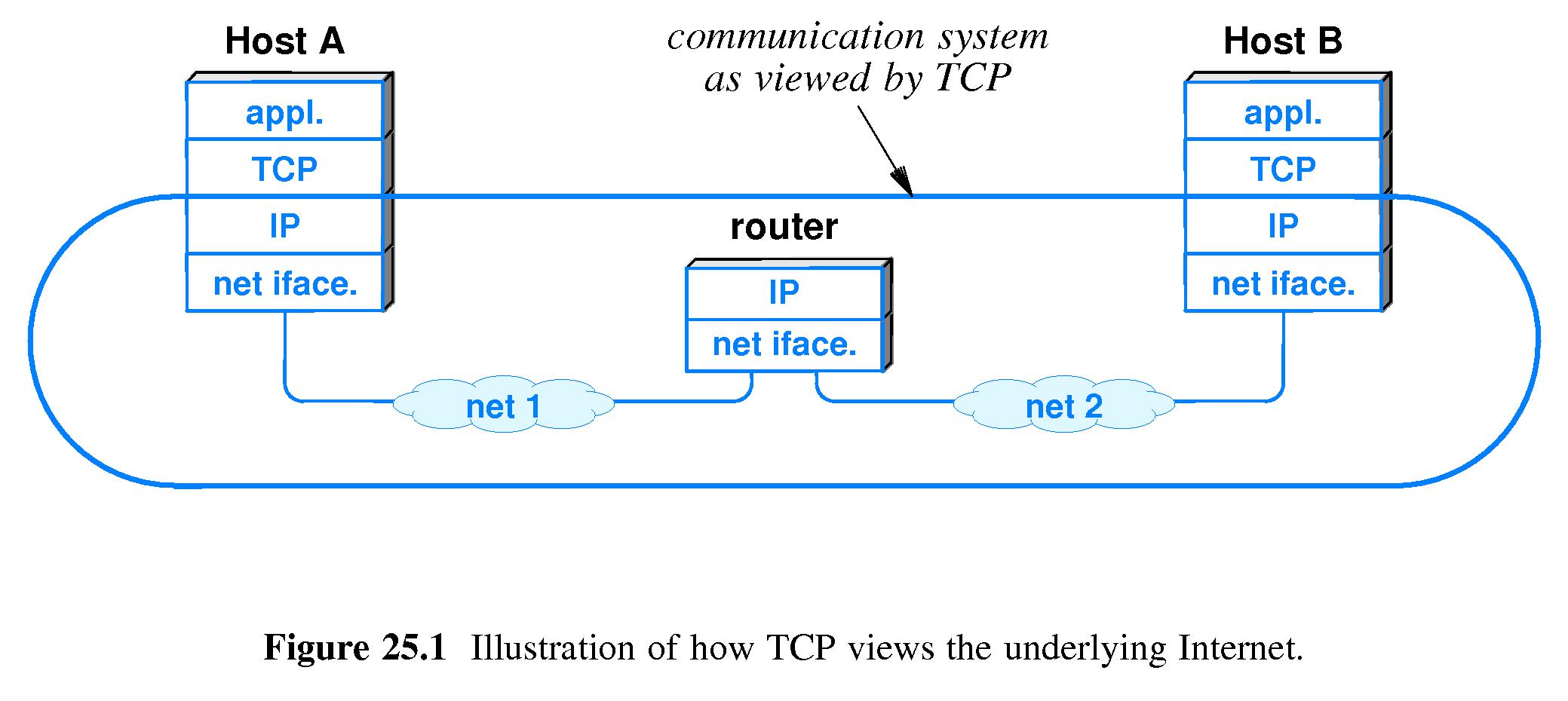

- 25.4 End-to-End Service and Virtual Connections

- Refer to Figure 25.1.

- TCP is an end-to-end protocol - it provides communication

between two applications (processes).

- TCP is connection-oriented but the connections are virtual.

- A TCP segment (packet) travels across a physical network encapsulated

in an IP datagram.

- 25.5 Techniques that Transport Protocols Use

- The Major Potential Problems:

- Unreliable Communication: messages sent across the

Internet are subject to loss, duplication, corruption, delay, and

out-of-order delivery.

- End System Reboot: communicating hosts can crash and reboot at any time.

- Heterogeneous End Systems: different hosts may differ

immensely in the speeds at which they can send and receive data.

- Congestion in the Internet: intermediate switches

and routers can be overrun with data.

- Question: How does TCP detect and recover from such problems?

- 25.5.1 Sequencing to Handle Duplicates and Out-of-Order

Delivery

- A sender assigns a sequence number to each TCP segment.

- Receivers use the sequence numbers to make sure that they hand

off segments in order to the next layer up. (The receiver

stores the sequence number of the last packet received in order,

plus a list of packets that have arrived out of order.)

- Receivers also detect duplicate packets by checking sequence

numbers.
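
The receiver behaviour described in 25.5.1 can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the class name `ReorderingReceiver` and its fields are hypothetical, and each segment carries one whole-number sequence number, as in the description above (real TCP numbers individual bytes).

```python
class ReorderingReceiver:
    """Hypothetical receiver that delivers segments in sequence order."""

    def __init__(self, first_seq=0):
        self.next_expected = first_seq   # lowest sequence number not yet delivered
        self.out_of_order = {}           # seq -> payload, held until the gap fills
        self.delivered = []              # payloads handed to the next layer up

    def receive(self, seq, payload):
        # A segment already delivered or already buffered is a duplicate.
        if seq < self.next_expected or seq in self.out_of_order:
            return "duplicate"
        self.out_of_order[seq] = payload
        # Deliver every segment that is now contiguous with what was delivered.
        while self.next_expected in self.out_of_order:
            self.delivered.append(self.out_of_order.pop(self.next_expected))
            self.next_expected += 1
        return "accepted"


rx = ReorderingReceiver()
arrivals = [(0, "a"), (2, "c"), (1, "b"), (2, "c"), (3, "d")]
results = [rx.receive(seq, data) for seq, data in arrivals]
```

Segment 2 arrives early and is held back until segment 1 fills the gap. The second copy of segment 2 is recognized as a duplicate because its sequence number is below `next_expected`.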

- 25.5.2 Retransmission to Handle Lost Packets

- TCP uses positive acknowledgement with retransmission.

- A receiver of a TCP segment sends a short message of

acknowledgement (an ACK) back to the sender.

- The sender has the responsibility to make sure that each segment

is received intact.

- The sender keeps track of how much time has elapsed after

it sends each segment. The sender will retransmit the segment

if it does not receive an ACK within a certain time limit.

- The sender will continue in this manner, retransmitting a

packet again and again if it continues to be unacknowledged.

- After a certain number of retransmissions, the sender will

eventually 'give up' and "declare that communication

impossible."

- It should be noted that retransmission can result in duplication

of packets - which underscores the importance of the fact that

TCP is able to deal with duplicate packets.
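
A minimal sketch of positive acknowledgement with retransmission follows. It assumes a hypothetical `channel` callable that stands in for "send the segment and wait for the timer": it returns `True` if an ACK arrives in time and `False` on a timeout. The limit of five attempts is an arbitrary illustrative value, not something the notes specify.

```python
def send_with_retransmission(segment, channel, max_attempts=5):
    """Send one segment, retransmitting until it is ACKed or attempts run out."""
    for attempt in range(1, max_attempts + 1):
        if channel(segment):
            return attempt          # number of transmissions that were needed
    raise ConnectionError("communication impossible: no ACK received")


def lossy_channel(outcomes):
    """Build a channel that replays a fixed list of ACK/timeout outcomes."""
    remaining = iter(outcomes)
    return lambda segment: next(remaining)


# The first two transmissions time out; the third is acknowledged.
attempts = send_with_retransmission("seg-1", lossy_channel([False, False, True]))

# Five timeouts in a row exhaust the attempts and the sender gives up.
try:
    send_with_retransmission("seg-2", lossy_channel([False] * 5))
    gave_up = False
except ConnectionError:
    gave_up = True
```

If the ACK itself is lost rather than the segment, the receiver sees the same segment twice, which is why the duplicate detection of 25.5.1 is needed alongside this mechanism.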

- 25.5.3 Techniques to Avoid Replay

- Replay: refers to the danger that a long-delayed packet

from a previous connection will be accepted as part of a later

conversation, and the correct packet bearing the same sequence

number discarded as a duplicate.

- If a protocol assigns a unique identifier to each connection,

and includes the identifier in each packet, then replays can be

detected.
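
The replay check can be illustrated as follows. The `Packet` type, its `connection_id` field, and the identifier values are all hypothetical and chosen only to show the idea: a packet is accepted only when its identifier matches the current connection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    connection_id: int   # hypothetical per-connection identifier
    seq: int
    payload: str


def accept(packet, current_connection_id):
    """Accept a packet only if it belongs to the current connection."""
    return packet.connection_id == current_connection_id


current_id = 7002
# A long-delayed packet from an earlier connection, reusing sequence number 5.
stale = Packet(connection_id=7001, seq=5, payload="old data")
# The genuine packet with the same sequence number on the current connection.
fresh = Packet(connection_id=7002, seq=5, payload="new data")

stale_accepted = accept(stale, current_id)
fresh_accepted = accept(fresh, current_id)
```

Without the identifier, both packets would look the same to the sequencing logic, since they carry the same sequence number.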

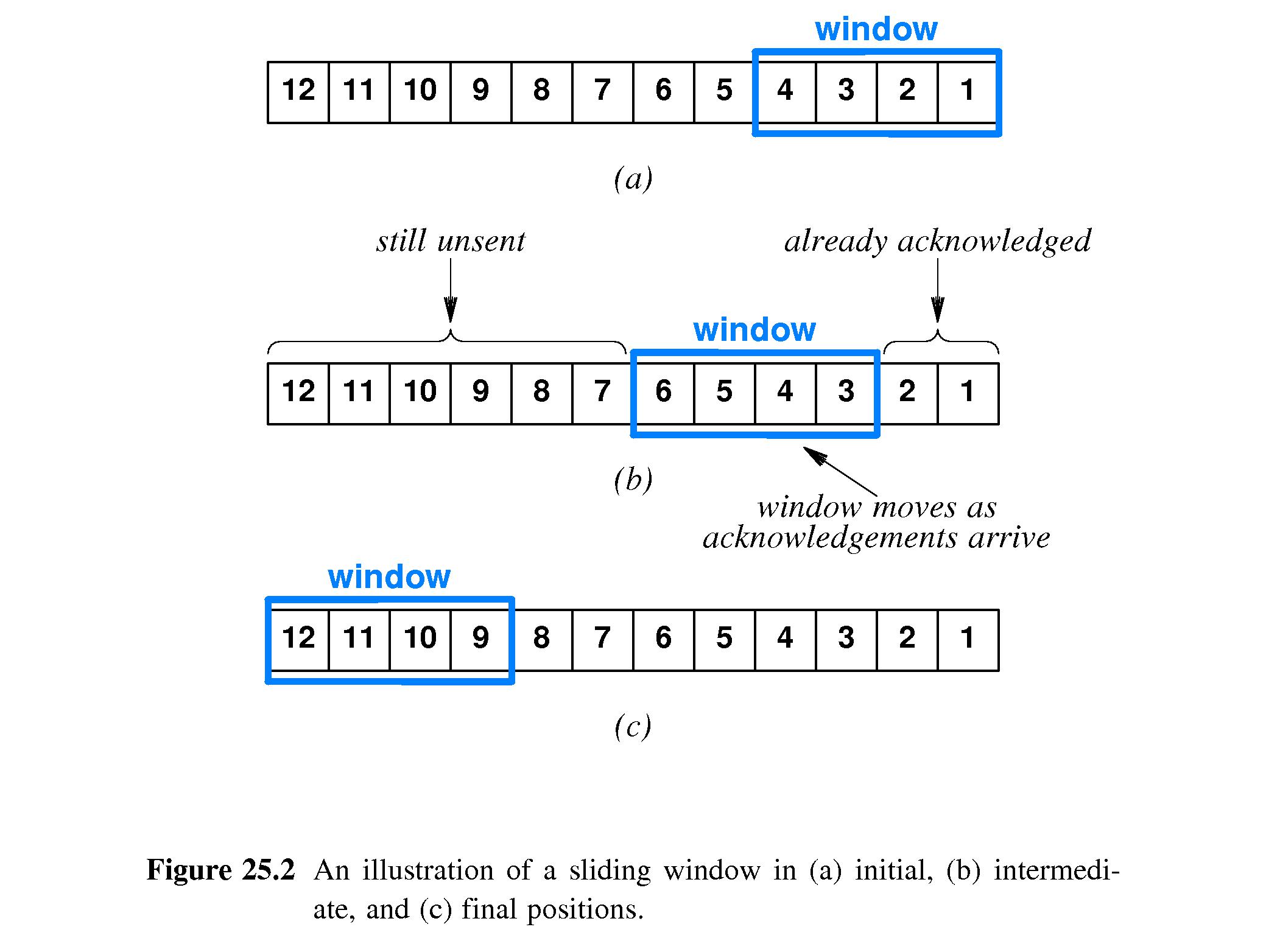

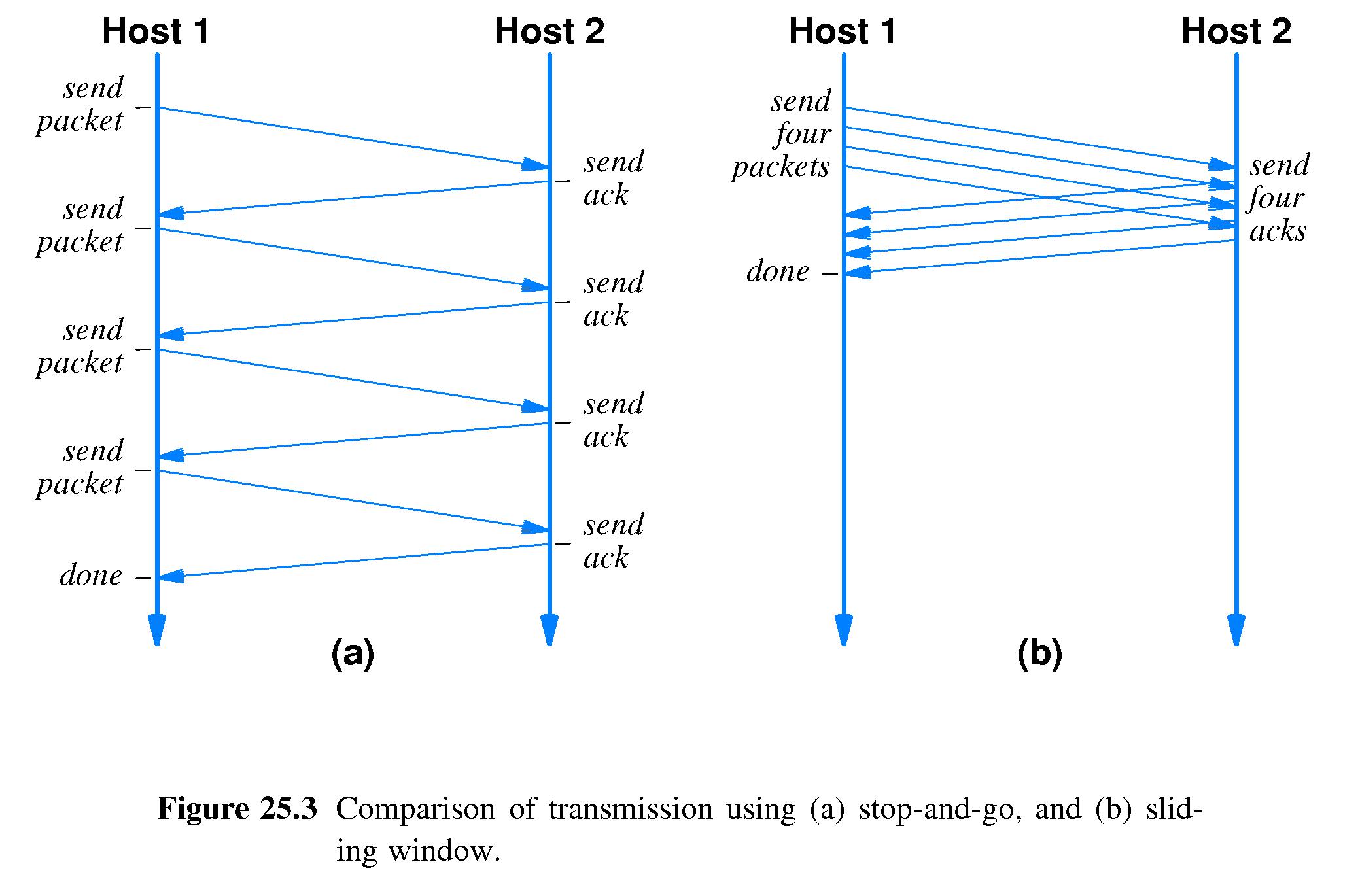

- 25.5.4 Flow Control to Prevent Data Overrun

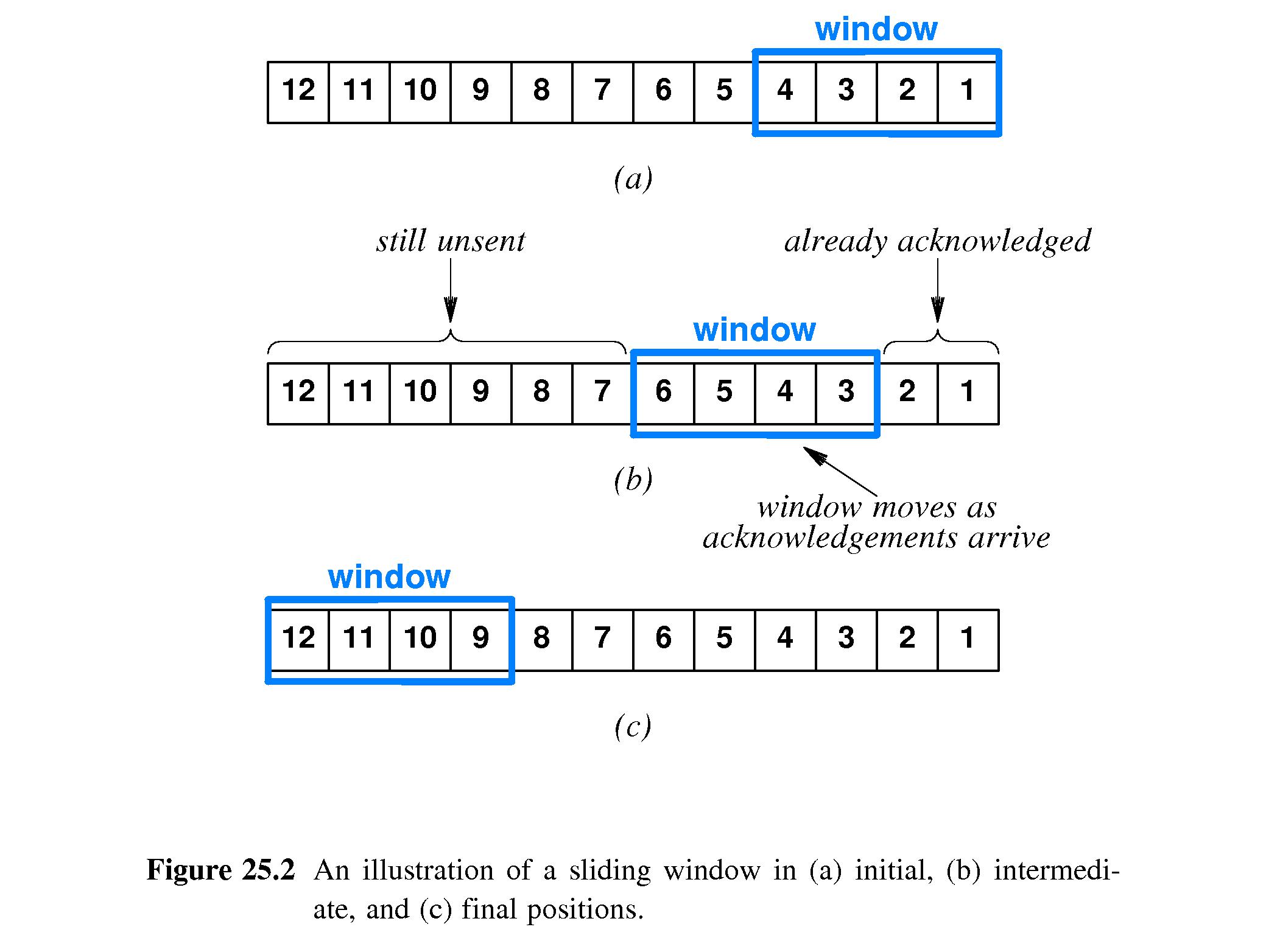

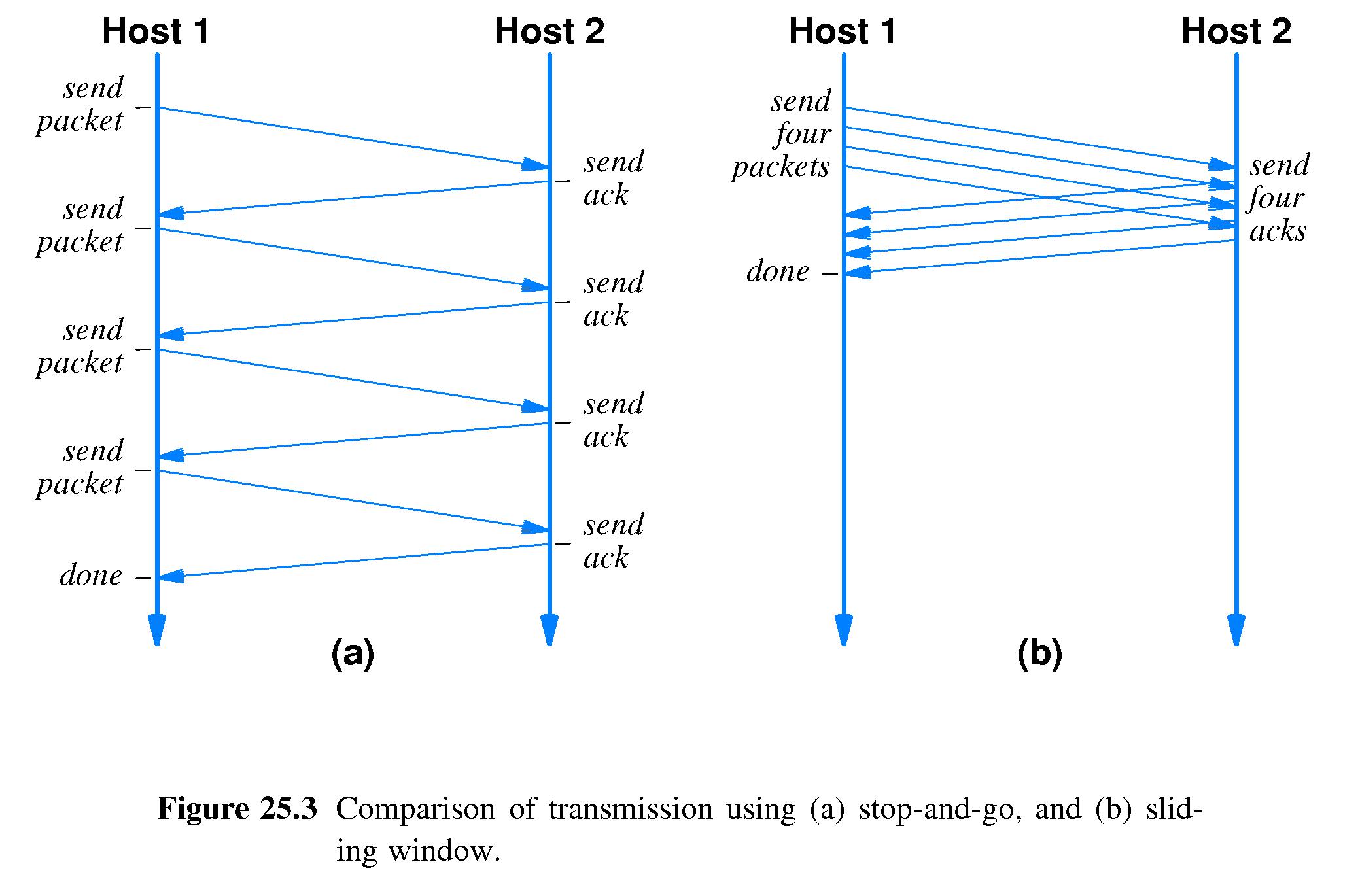

- Refer to Figures 25.2 and 25.3.

- The purpose of flow control is to prevent data

from being sent faster than receivers can process it.

- Stop-and-go protocols will do the trick - the sender

waits for each packet to be acknowledged by the receiver

before sending another packet.

- However, stop-and-go can slow senders much more than necessary

and result in unacceptably low data transfer rates.

- A sliding window scheme is likely to work as well and

produce much higher data rates. The receiver preallocates

buffer space for a certain number (the window size) of packets.

- The sender keeps track of how full the receiver's buffer is.

- The sender only sends a packet if it is sure there is room for

it in the receiver's buffer.

- Suppose the sender knows that the receiver does not keep

ACK'd packets in its buffer.

- Then it is safe for the sender to keep sending packets until the

number of un-ACK'd packets is equal to the buffer size.

- At that point, the receiver's buffer could be full, or about to

become full so the sender must pause until more packets are

ACK'd.

- Under ideal conditions, the sender can send a window's worth of

packets at a time, then receive an ACK for all of them

almost as quickly as the round-trip time of a single

message between sender and receiver.

At that point the sender can send another window's worth

of packets, and so on, repeating indefinitely. This would result

in a data rate of about

$$T_W = T_g \times W$$

where $T_W$ is the data rate that can be

achieved with a sliding window of size $W$,

and $T_g$ is the data rate that

can be achieved with stop-and-go processing.

- Thus under ideal conditions, if T is the

time it takes for a

datum to travel from the sender to the receiver and back, the

sender may be able to send almost a window's worth

of data in every time slot of length T.

- In contrast, using stop-and-go, the sender could only send

one packet in each time slot of

length T.

- Of course the amount the sender can send in a given time is also

limited by the available bandwidth. So the approximation above

would be more accurate if written this way:

$$T_W = \min\{\, C,\; T_g \times W \,\}$$

where C is the maximum data rate of the communication

channel.
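
A short worked example may make the approximation concrete. The packet size, round-trip time, and channel capacity below are assumed values chosen only for illustration, and the function names are hypothetical.

```python
def stop_and_go_rate(packet_bits, round_trip_s):
    """Data rate in bits per second when one packet is sent per round trip."""
    return packet_bits / round_trip_s


def sliding_window_rate(packet_bits, round_trip_s, window, channel_bps):
    """Approximate sliding-window rate: min(C, Tg * W)."""
    return min(channel_bps, stop_and_go_rate(packet_bits, round_trip_s) * window)


# Assumed example values: 1000-byte packets, 100 ms round trip, 10 Mbit/s channel.
PACKET_BITS = 8000
RTT = 0.1
CHANNEL = 10_000_000

tg = stop_and_go_rate(PACKET_BITS, RTT)                      # about 80 kbit/s
small = sliding_window_rate(PACKET_BITS, RTT, 16, CHANNEL)   # limited by the window
large = sliding_window_rate(PACKET_BITS, RTT, 256, CHANNEL)  # limited by the channel
```

With a window of 16 packets the rate is sixteen times the stop-and-go rate (about 1.28 Mbit/s). With a window of 256 the product would exceed the channel capacity, so the rate is capped at 10 Mbit/s.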

- 25.6 Techniques to Avoid Congestion

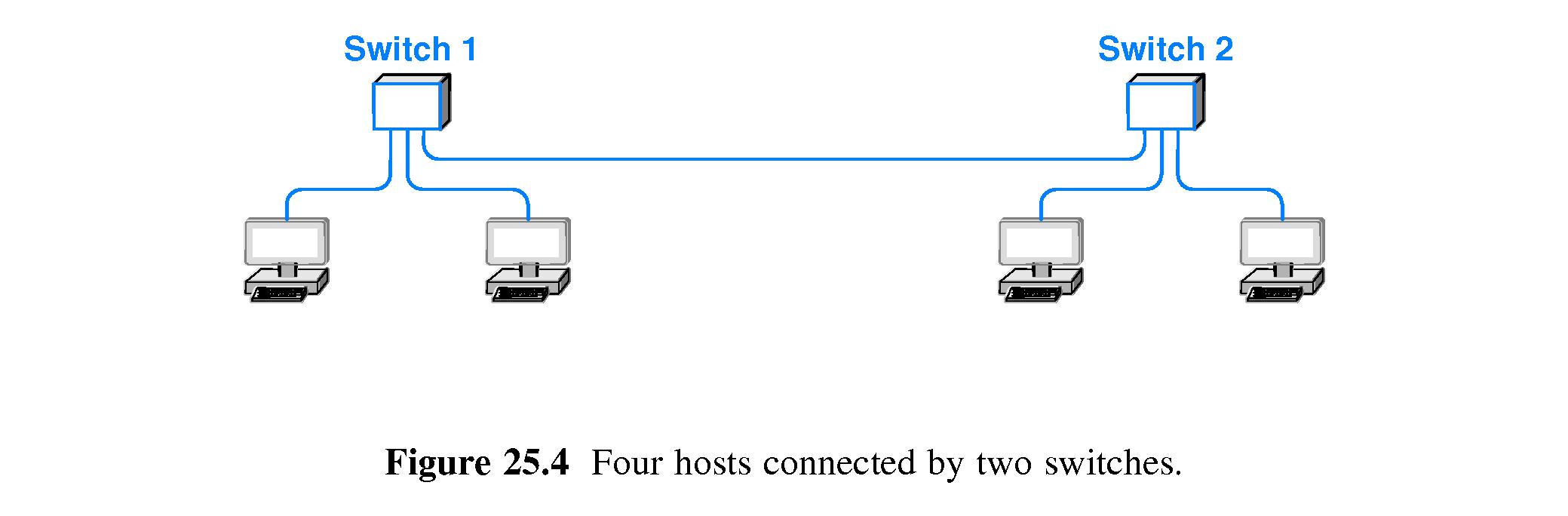

- Refer to Figure 25.4

- Congestion is a very real and constant 'threat' on today's networks.

- The basic reason is that routers and links often do not have the

ability to handle the amount of traffic that can potentially be

injected into them.

- One approach is to create a scheme wherein devices downstream send

messages back to the sources telling them to slow down.

- Sources can react a little faster if they simply reduce their window

size temporarily whenever an acknowledgement times out, because the

usual cause of a timeout is congestion.

- Relieving congestion quickly matters because routers and

hosts 'drop' packets when their buffers overflow. More dropped

packets mean more retransmission, hence more load on the network.

- 25.7 The Art of Protocol Design

- Protocol design details must be chosen carefully.

- Sliding Window and Congestion control algorithms are

examples of pieces of a protocol design that can potentially

interact and conflict in complex ways, because one is designed

to speed up data flow, and the other is designed to slow it down.

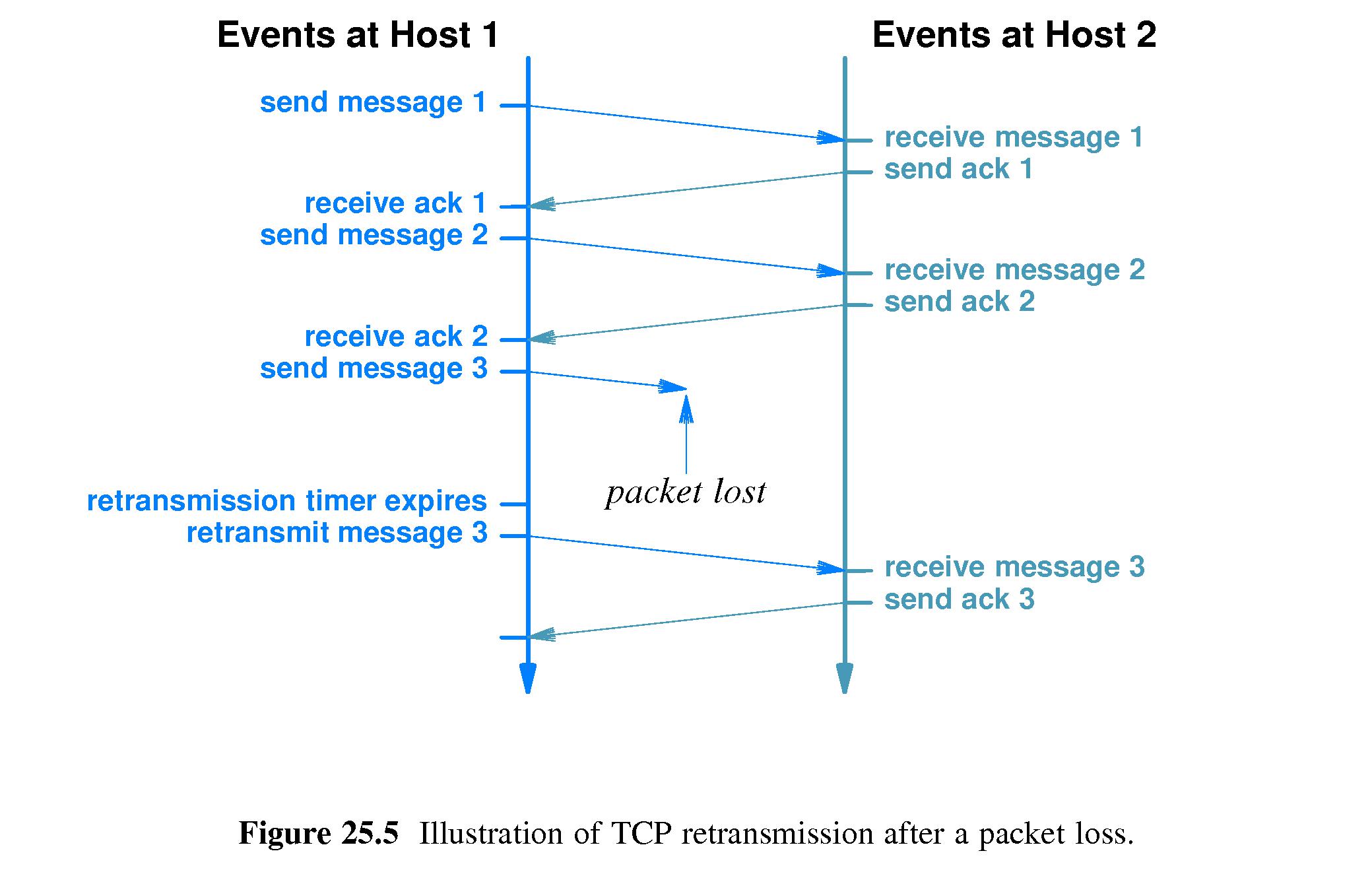

- 25.8 Techniques Used in TCP to Handle Packet Loss

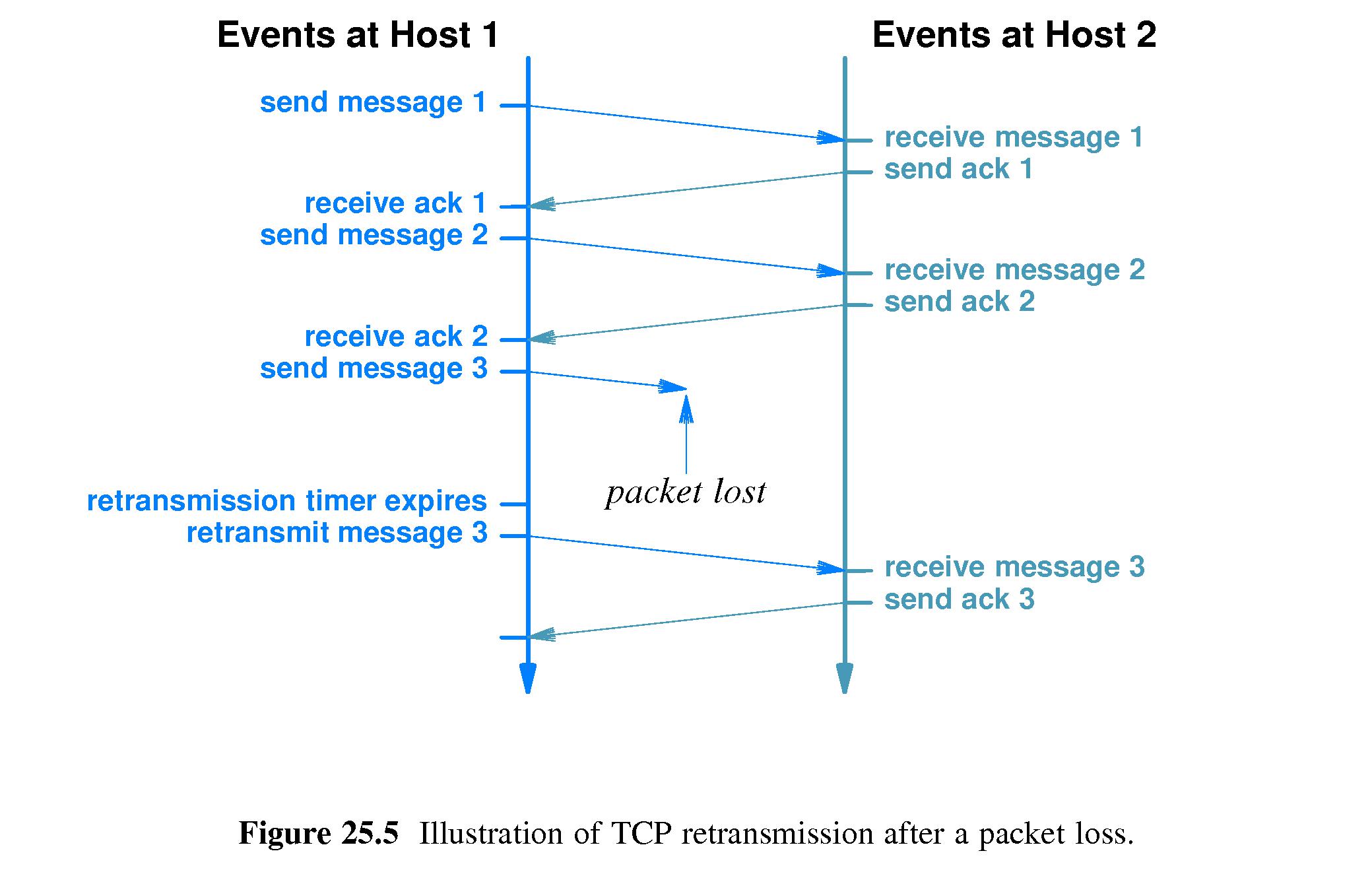

- Refer to Figure 25.5.

- In designing a protocol such as TCP, one has to be careful when

programming how long TCP will wait for an ACK before retransmitting a

packet.

- Waiting too long leads to less utilization of the network and

lost time on the sending and receiving hosts. Not waiting long enough

means unnecessarily loading the network with duplicate packets.

- To calculate a reasonable wait time, one should consider not only the

distance to the destination, but the delay due to traffic congestion.

- 25.9 Adaptive Retransmission

- TCP monitors round-trip delay on each connection and adapts

its timeout values according to changing conditions.

- TCP uses a formula to set timeouts that allows it to be conservative

during periods when delay is fluctuating.
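
The notes do not give the formula. As one concrete possibility, the sketch below follows the smoothed estimator standardized in RFC 6298, with the usual gains of 1/8 and 1/4. It omits that RFC's one-second minimum timeout and its clock-granularity term, and the class name `RtoEstimator` is hypothetical.

```python
class RtoEstimator:
    """Smoothed round-trip time and timeout estimate in the style of RFC 6298."""

    ALPHA = 1 / 8   # weight of a new sample in the smoothed RTT
    BETA = 1 / 4    # weight of a new sample in the RTT variation

    def __init__(self):
        self.srtt = None     # smoothed round-trip time, seconds
        self.rttvar = None   # smoothed deviation of the round-trip time

    def update(self, sample):
        """Fold in one round-trip sample (seconds) and return the new timeout."""
        if self.srtt is None:
            # First measurement initializes both estimates.
            self.srtt = sample
            self.rttvar = sample / 2
        else:
            # The variation is updated first, using the old smoothed RTT.
            self.rttvar = ((1 - self.BETA) * self.rttvar
                           + self.BETA * abs(self.srtt - sample))
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * sample
        return self.srtt + 4 * self.rttvar


steady = RtoEstimator()
for rtt in [0.100] * 20:            # constant 100 ms delay
    steady_rto = steady.update(rtt)

jittery = RtoEstimator()
for rtt in [0.050, 0.150] * 10:     # same mean delay, large fluctuation
    jittery_rto = jittery.update(rtt)
```

Both connections have the same mean delay, but the fluctuating one ends up with a noticeably larger timeout. That is the conservative behaviour described above: the variation term widens the safety margin only when delay is unstable.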

- 25.10 Comparison of Retransmission Times

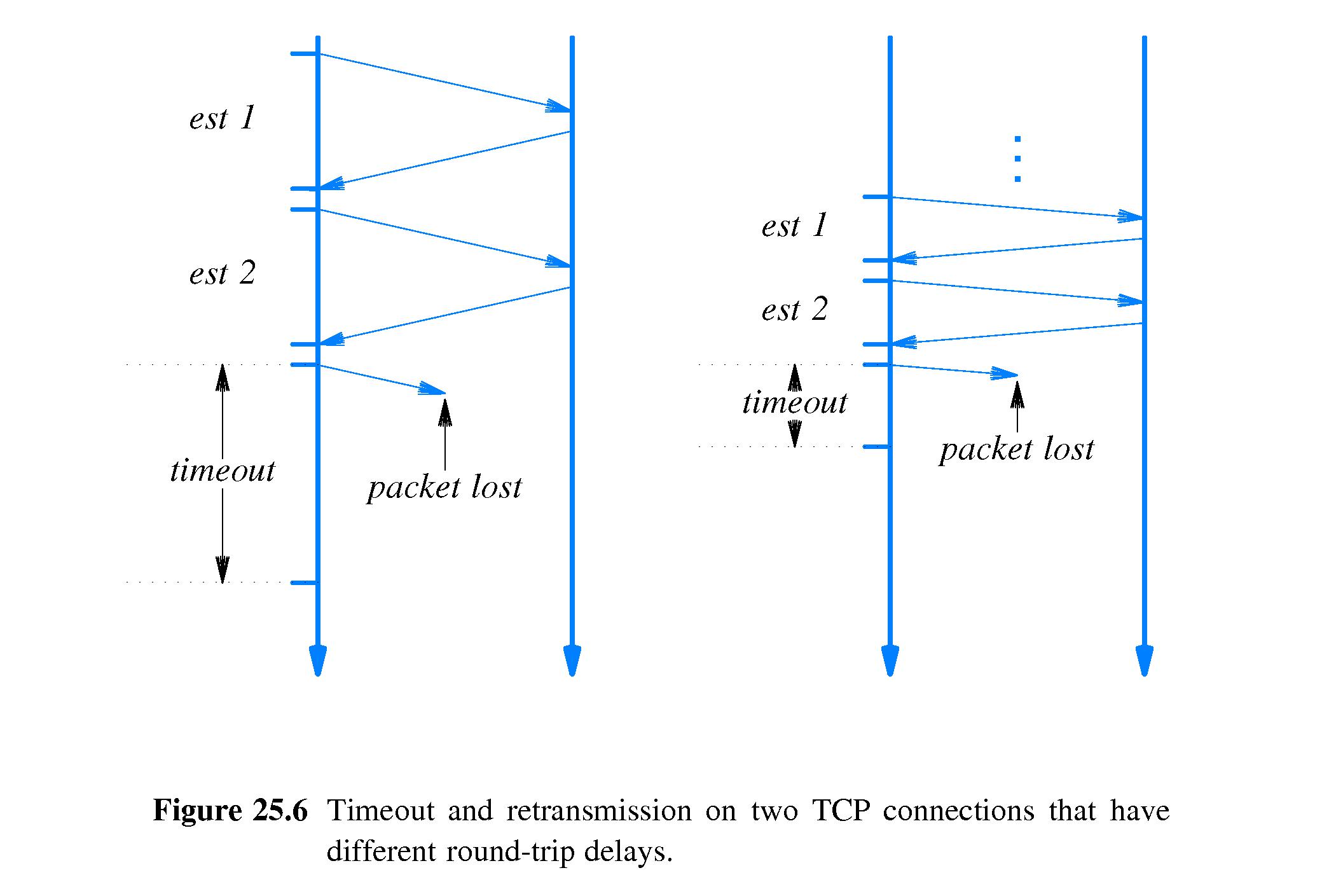

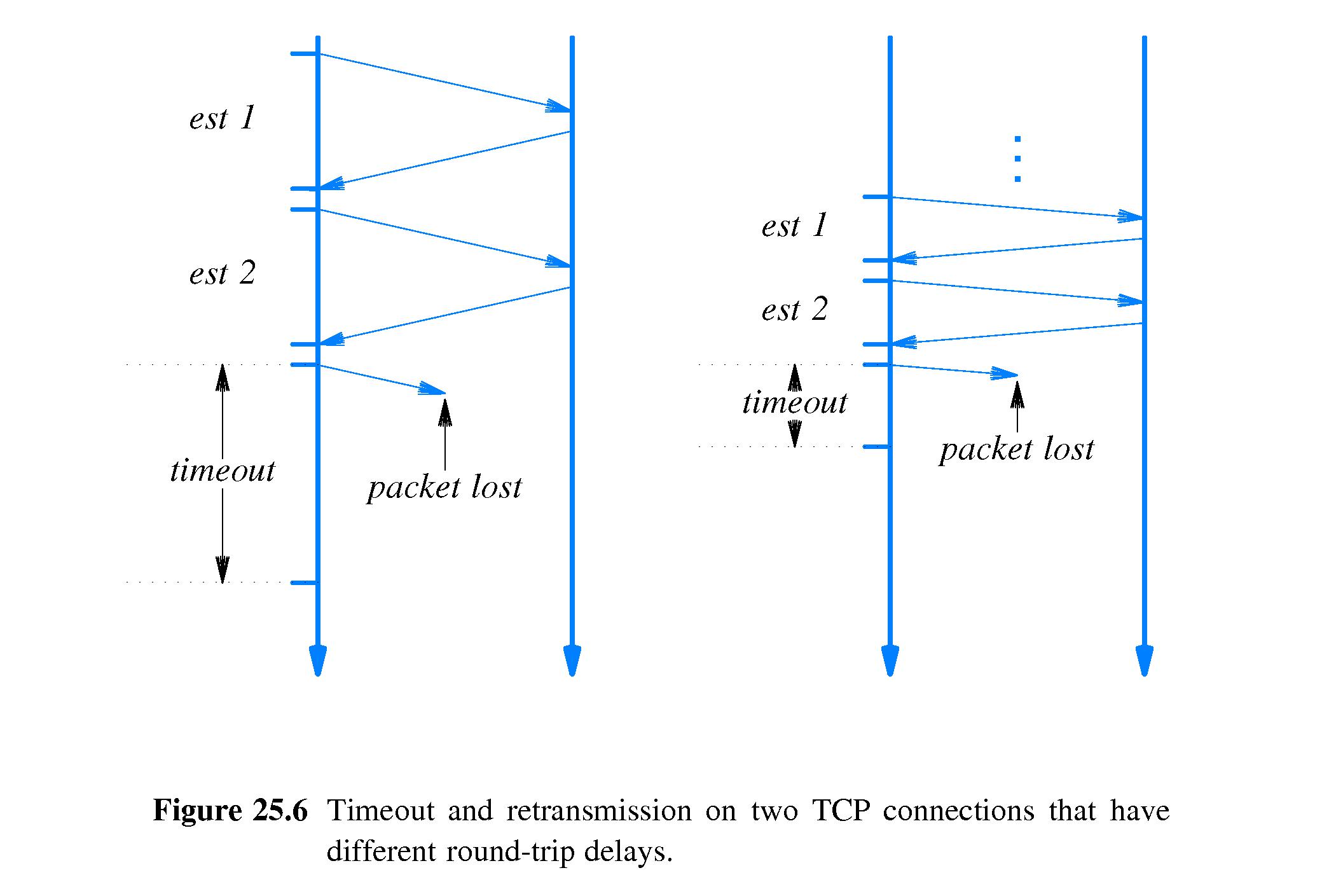

- Refer to Figure 25.6.

- During times when delay remains fairly constant, the timeout maintained

by TCP for a connection is an amount slightly higher than

the current mean round-trip delay.

- Waiting this long is enough to conclude that the packet was probably

lost. However it's not likely to be a wait that is much longer than

necessary.

- 25.11 Buffers, Flow Control and Windows

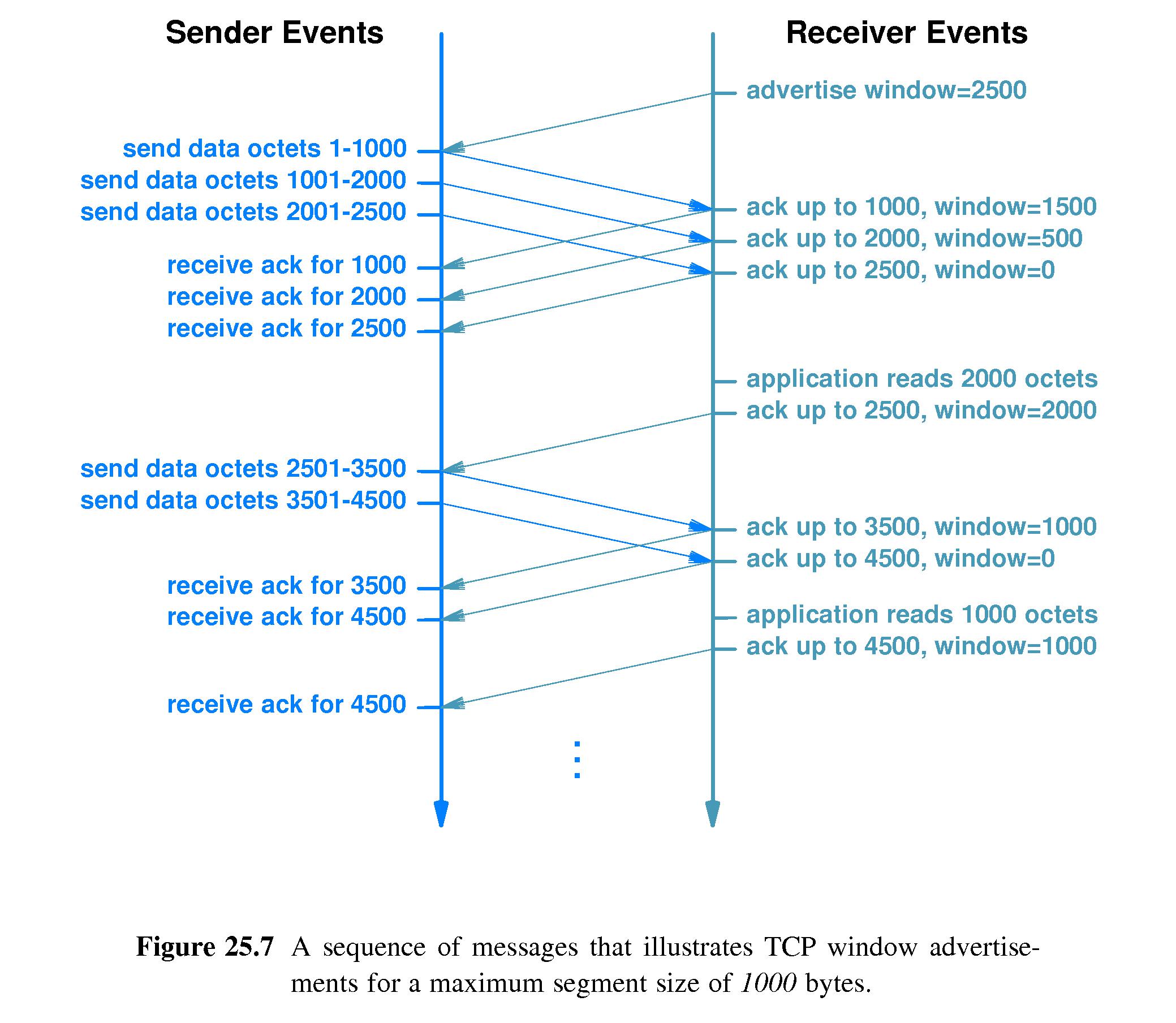

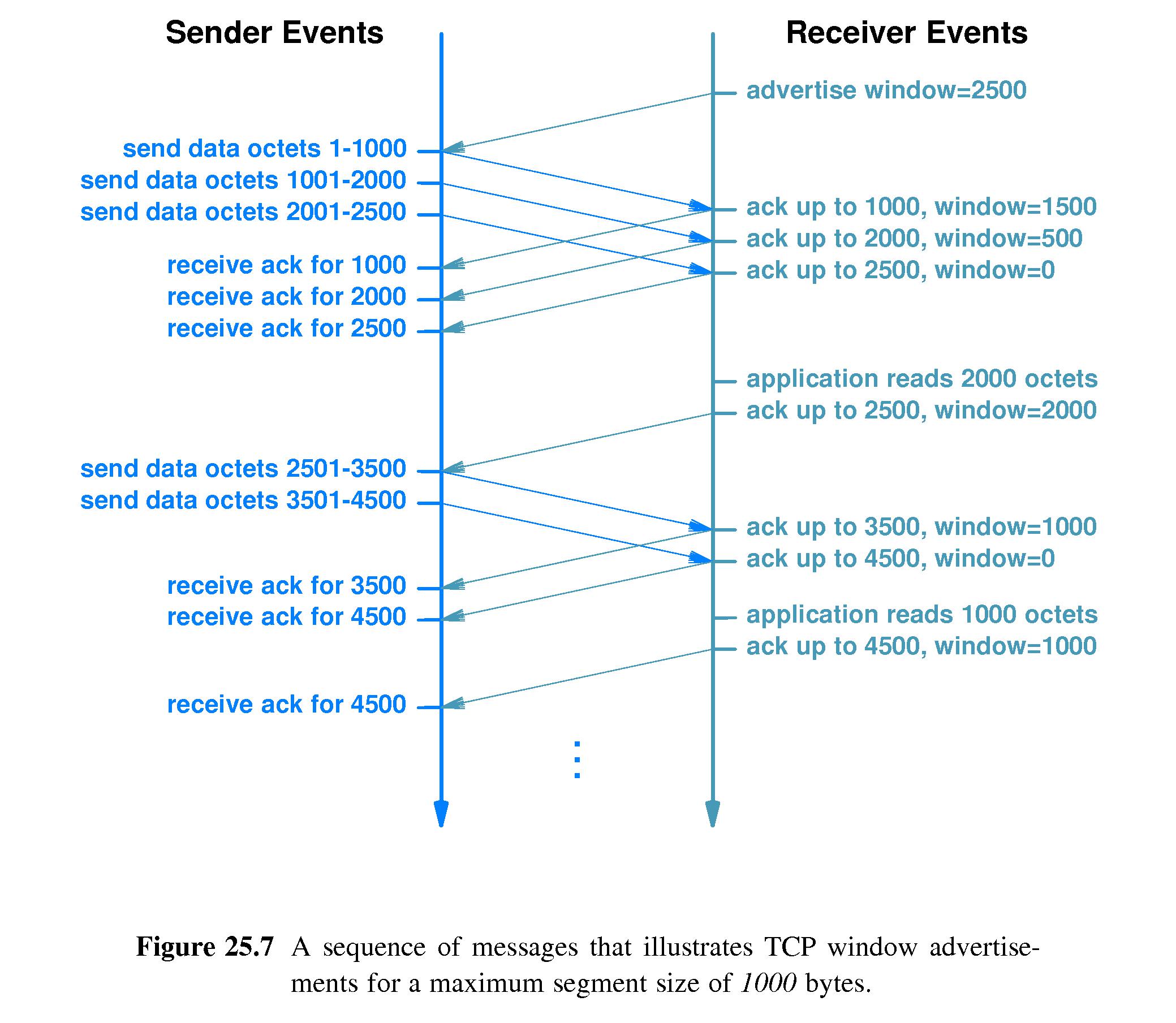

- Refer to Figure 25.7.

- TCP flow control follows the sliding window paradigm described

earlier, but not in every detail.

- The TCP window is measured in bytes.

- TCP does not use ACKs as an indication of current window size. It

uses a separate mechanism called a window advertisement.

- A receiver sends a window advertisement with each acknowledgement.

- When the receiver runs out of buffer space it advertises a zero

window.
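
Window advertisements can be sketched from the receiver's side as follows. The `ReceiveBuffer` class, its method names, and the 2500-byte capacity are assumptions made for illustration. The window is counted in bytes, as in TCP.

```python
class ReceiveBuffer:
    """Hypothetical receive buffer that advertises its free space in bytes."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.buffered = 0        # bytes received but not yet read by the application

    def window(self):
        """Free space, which is the value carried in a window advertisement."""
        return self.capacity - self.buffered

    def on_segment(self, nbytes):
        """Store arriving data and return the window to advertise with the ACK."""
        if nbytes > self.window():
            raise ValueError("sender exceeded the advertised window")
        self.buffered += nbytes
        return self.window()

    def application_read(self, nbytes):
        """The application consumes data, which frees buffer space."""
        self.buffered -= min(nbytes, self.buffered)
        return self.window()


buf = ReceiveBuffer(capacity=2500)
adverts = [buf.on_segment(1000), buf.on_segment(1000), buf.on_segment(500)]
reopened = buf.application_read(2000)
```

The three segments fill the buffer, so the advertisements shrink to 1500, 500, and finally 0 bytes. Once the application reads 2000 bytes, the receiver can advertise a 2000-byte window and the sender may resume.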

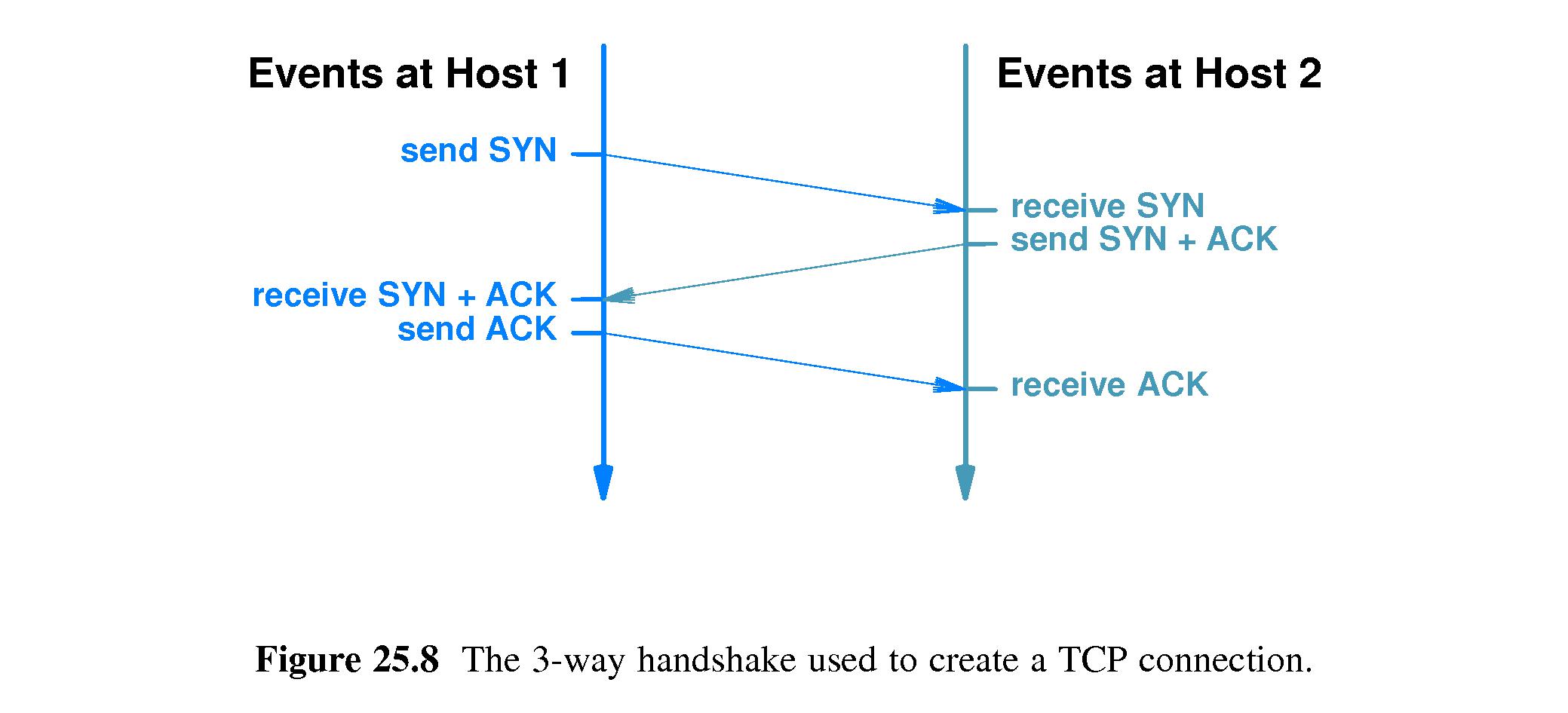

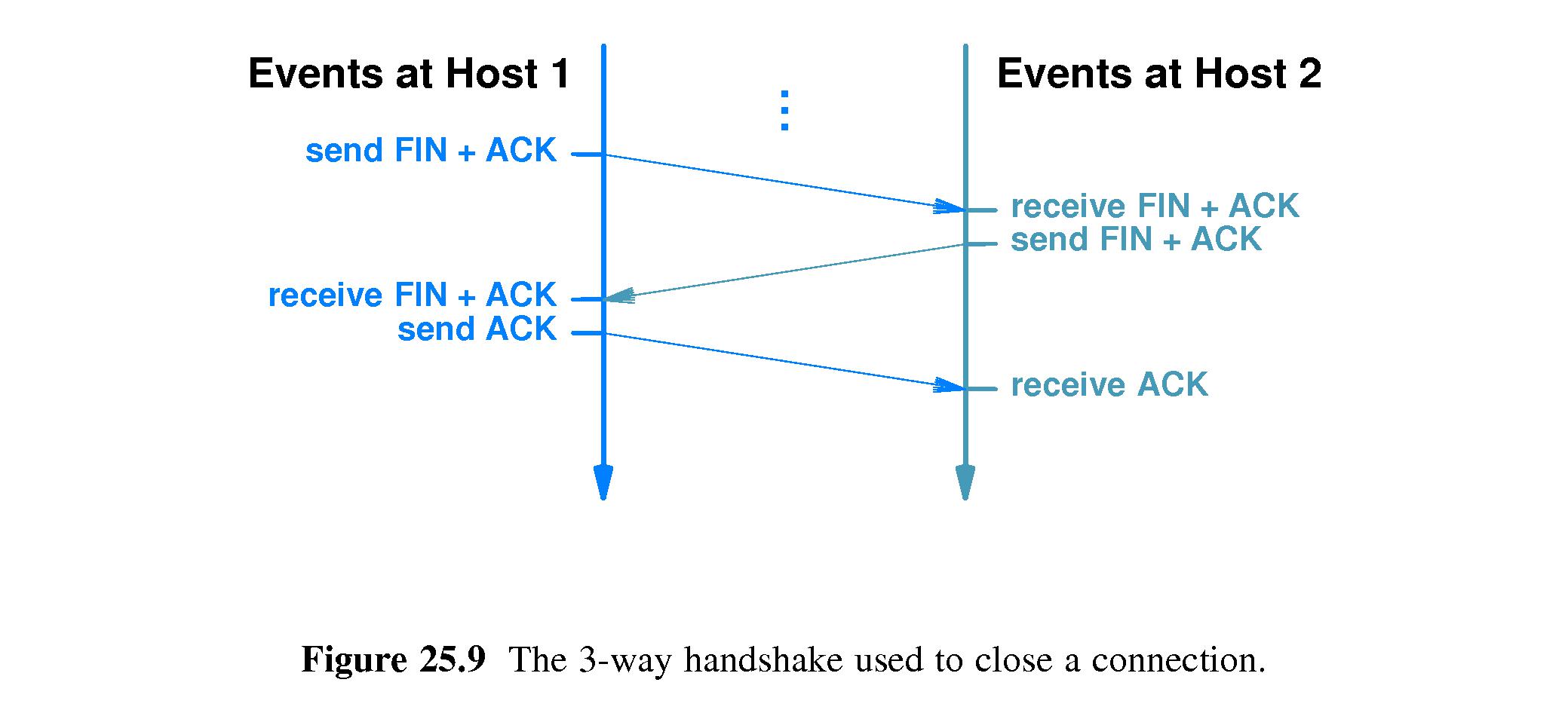

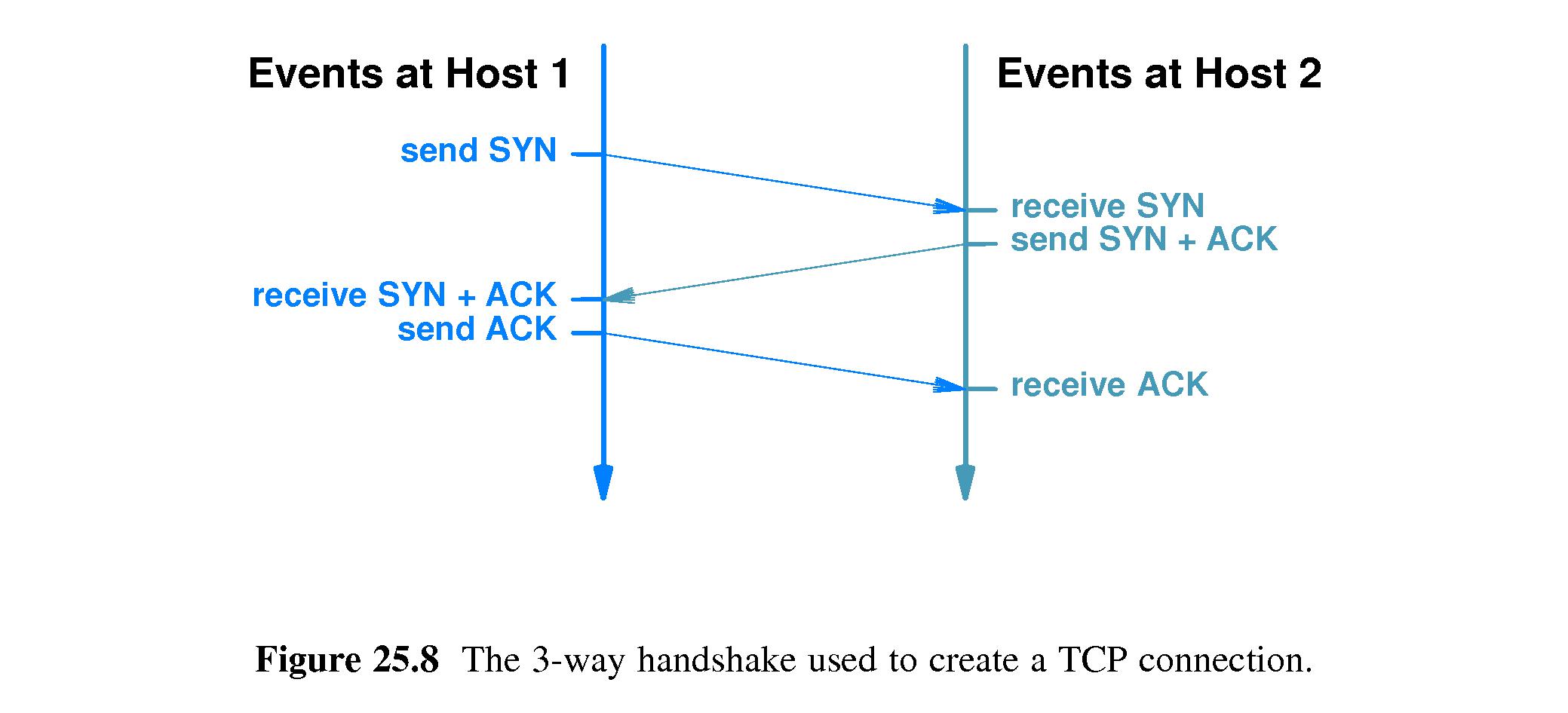

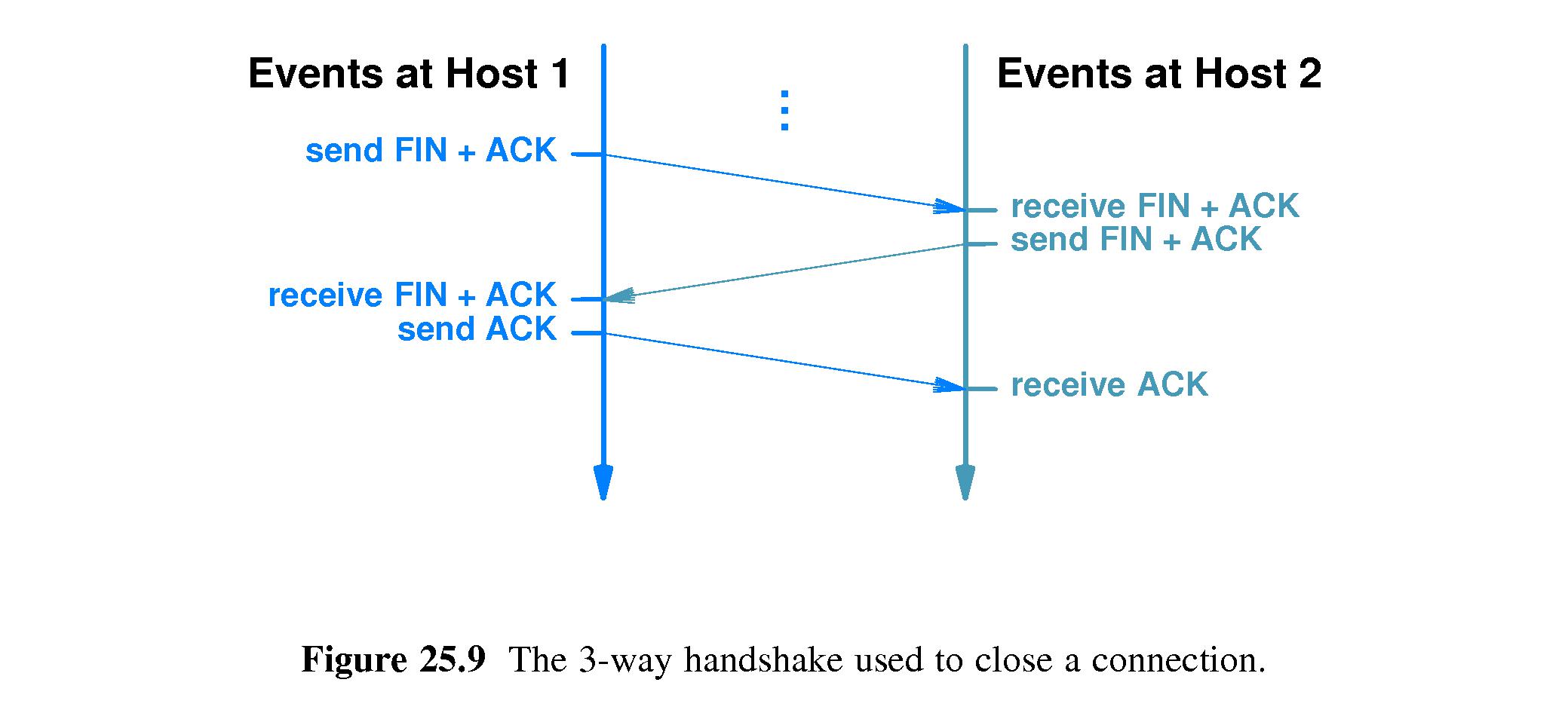

- 25.12 TCP's Three-Way Handshake

- Refer to Figure 25.8.

- Refer to Figure 25.9.

- When TCP either establishes or closes a connection, it uses a

sequence of three messages.

- These sequences are called three-way handshakes.

- When establishing a connection, each side is required to generate a

random 32-bit number to use as its initial sequence number. These sequence

numbers serve as connection identifiers for use in avoiding replay

problems.
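
The connection-opening exchange can be sketched as three messages. The message names SYN, SYN+ACK, and ACK come from the TCP standard rather than from these notes, and the dictionary layout and function name are hypothetical. Each side acknowledges the other's initial sequence number plus one, since the SYN itself uses up one sequence number.

```python
import random


def three_way_handshake(rng):
    """Return the three connection-opening messages as dictionaries."""
    client_isn = rng.getrandbits(32)    # client's initial sequence number
    server_isn = rng.getrandbits(32)    # server's initial sequence number
    modulus = 2 ** 32                   # sequence numbers wrap at 32 bits

    syn = {"flags": {"SYN"}, "seq": client_isn}
    syn_ack = {"flags": {"SYN", "ACK"}, "seq": server_isn,
               "ack": (client_isn + 1) % modulus}
    ack = {"flags": {"ACK"}, "seq": (client_isn + 1) % modulus,
           "ack": (server_isn + 1) % modulus}
    return [syn, syn_ack, ack]


# A seeded generator keeps the example repeatable; a real stack would not do this.
messages = three_way_handshake(random.Random(25))
```

Because both initial numbers are chosen at random for each connection, a delayed segment from an earlier connection is unlikely to fall within the sequence range the new connection expects.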

- 25.13 TCP Congestion Control

- When starting a new connection or when a message is lost, TCP

backs off - it sends nothing for a while except one message.

- If the first message is ACK'd without loss, TCP sends two additional

messages.

- If those are ACK'd normally it sends four more, and so on until

reaching a point where it is sending half the window size.

- After that it ramps up slowly and linearly until

reaching the window size (assuming there's no sign of congestion).

- When TCP connections collectively use this slow start

methodology on the Internet, it works to alleviate congestion.

It helps avoid sending a lot of packets into congested areas.
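
The ramp-up described in this section can be traced with a small sketch. It assumes that no message is lost and counts the window in whole messages. The function name and the window of 16 are illustrative choices, not values from the notes.

```python
def congestion_window_schedule(window, rounds):
    """Messages allowed per round trip: double up to half the window, then add one."""
    sizes = []
    cwnd = 1                                    # after backing off, send one message
    for _ in range(rounds):
        sizes.append(cwnd)
        if cwnd < window // 2:
            cwnd = min(cwnd * 2, window // 2)   # exponential phase (slow start)
        else:
            cwnd = min(cwnd + 1, window)        # slow, linear phase
    return sizes


schedule = congestion_window_schedule(window=16, rounds=12)
```

For a window of 16 messages the schedule is 1, 2, 4, 8, then 9, 10, and so on up to 16. A lost message would restart the schedule from one message.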

- 25.14 Versions of TCP Congestion Control

- Versions of TCP congestion control are by tradition

named after places in Nevada (for example Tahoe, Reno, and Vegas).

- Most systems are now running NewReno.

- 25.15 Other Variations: SACK and ECN

- Selective Acknowledgement (SACK)

- The idea of SACK is to allow receivers to specify

exactly which segments have to be resent.

- In practice it usually worked no better than

the older cumulative acknowledgement scheme.

- Explicit Congestion Notification (ECN)

- The idea of ECN is for routers to mark packets that

experience congestion and for receivers to notify

the senders about the marks when returning ACKs.

- ECN is not used much. The information tends to be

stale when it gets back to the senders.

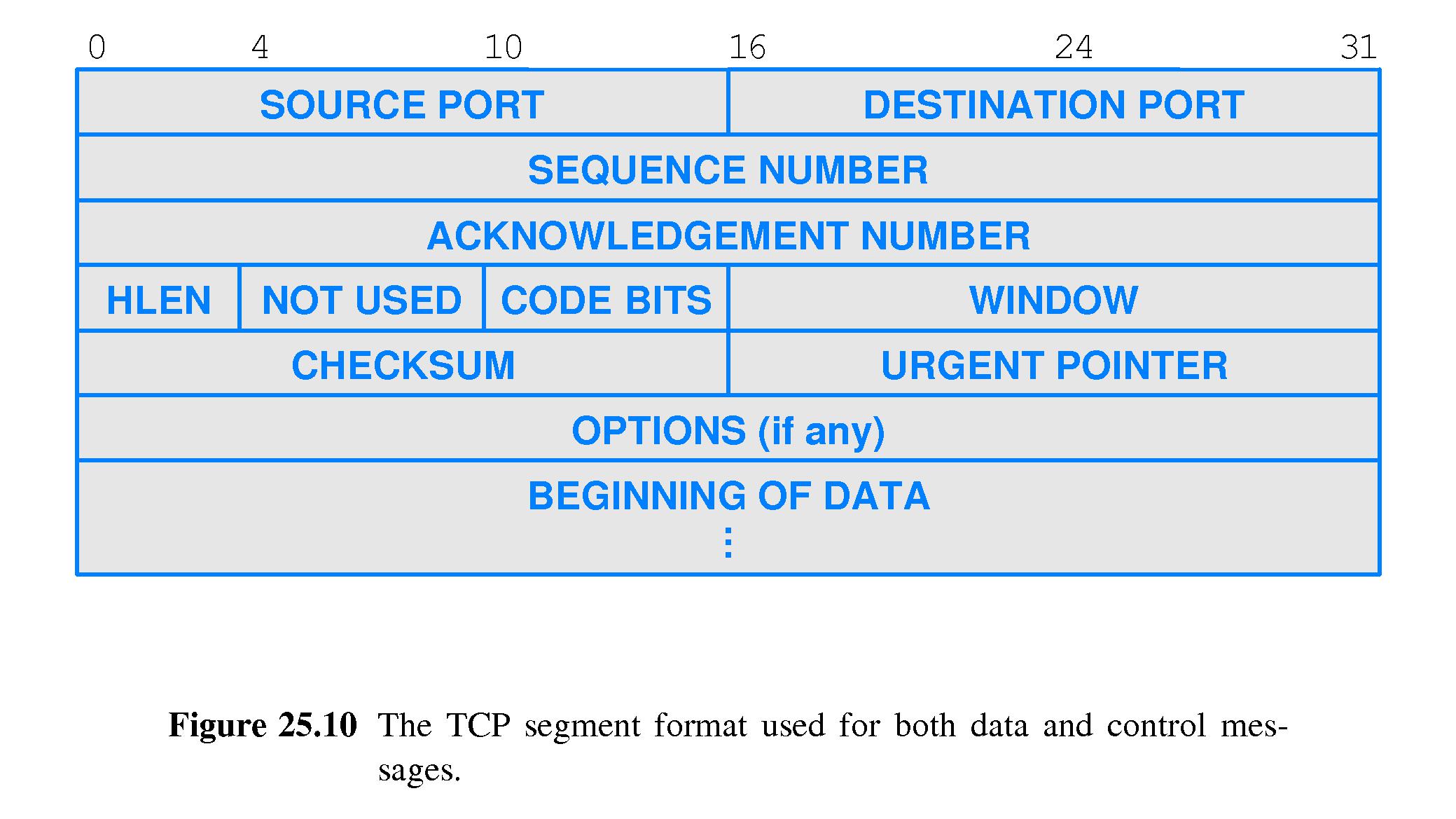

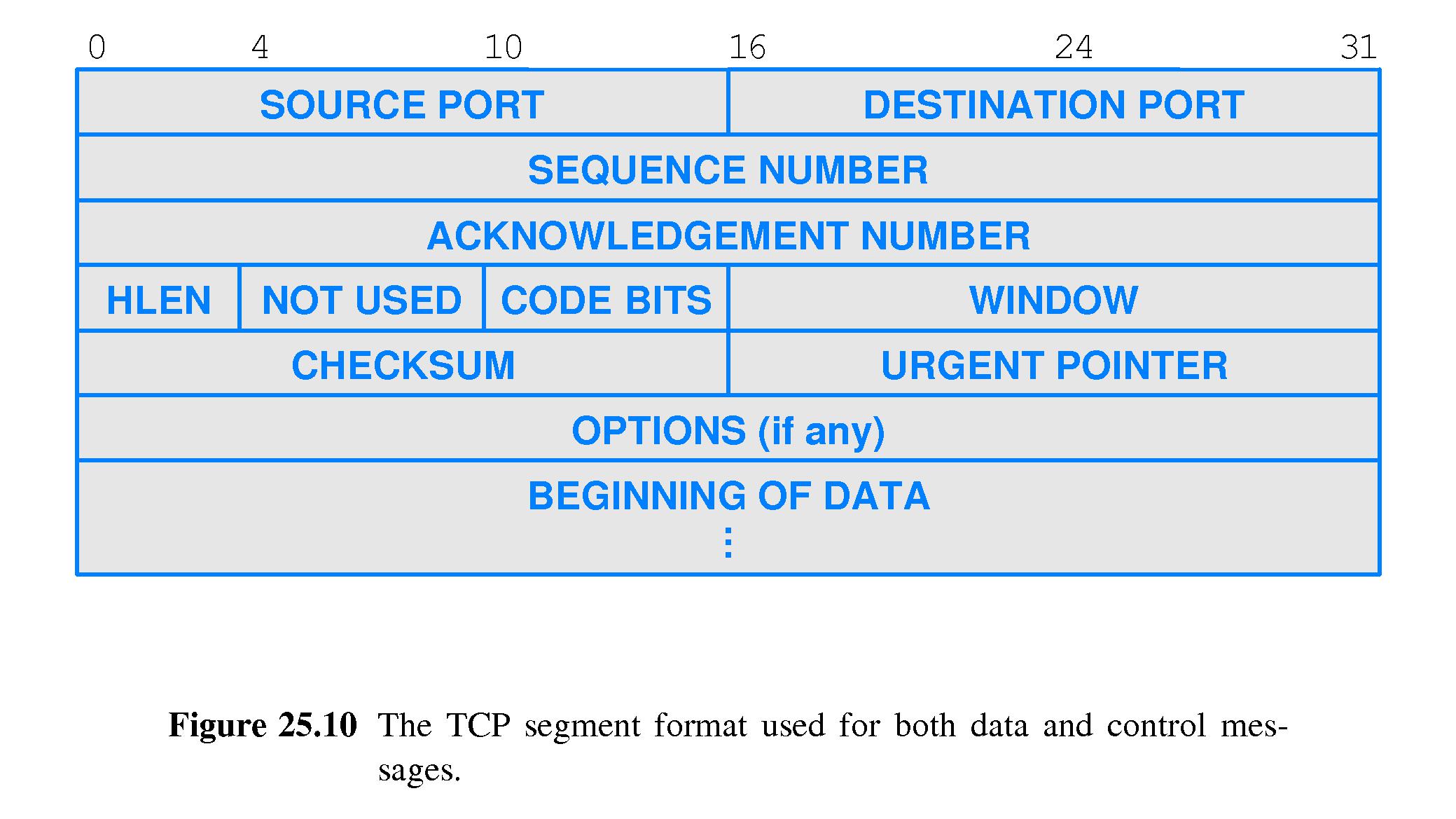

- 25.16 TCP Segment Format

- Refer to Figure 25.10.

- TCP uses the same TCP segment format for all messages.

- Some of the fields refer to the 'incoming' data stream (coming

from the DESTINATION PORT), and others to the 'outbound'

data stream (coming from the SOURCE PORT and contained in the

DATA section of the segment).

- Importantly, the SEQUENCE NUMBER field is the sequence

number of the first octet of data (payload) carried in the segment in the

outbound direction.

- The ACKNOWLEDGEMENT NUMBER is the first sequence number for which

data is missing in the incoming direction.

- The WINDOW denotes the amount of buffer space available for incoming

data on the SOURCE host (this is the advertisement).
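
The fields discussed in 25.16 can be read from the fixed 20-byte part of a TCP header with Python's standard `struct` module. The field order and sizes follow the standard TCP header layout. The function name, dictionary keys, and example values are made up for illustration, and TCP options are ignored.

```python
import struct

# Fixed 20-byte TCP header without options, in network byte order:
# source port, destination port, sequence number, acknowledgement number,
# header length plus flag bits, window, checksum, urgent pointer.
TCP_HEADER = struct.Struct("!HHIIHHHH")


def parse_tcp_header(data):
    """Decode the fixed part of a TCP header into a dictionary."""
    (src, dst, seq, ack, offset_flags,
     window, checksum, urgent) = TCP_HEADER.unpack(data[:TCP_HEADER.size])
    return {
        "source_port": src,
        "destination_port": dst,
        "sequence_number": seq,
        "acknowledgement_number": ack,
        # The top four bits give the header length in 32-bit words.
        "header_length_bytes": (offset_flags >> 12) * 4,
        "window": window,
    }


# Made-up example values: header length of 5 words, ACK flag (0x010) set.
raw = TCP_HEADER.pack(49152, 80, 1000, 5001, (5 << 12) | 0x010, 4096, 0, 0)
header = parse_tcp_header(raw)
```

In this example the sender is telling its peer three things: the first octet of its own data is number 1000, it expects octet 5001 next from the peer, and it has 4096 bytes of buffer space available.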